"mipsytipsy" / 2025-07-10 3 months ago / 未收藏/ charity.wtf/

发送到 kindle

I’ve never published an essay quite like this. I’ve written about my life before, reams of stuff actually, because that’s how I process what I think, but never for public consumption. I’ve been pushing myself to write more lately because my co-authors and I have a whole fucking book to write between now and October. […]

"Daniel Lemire" / 2025-07-10 3 months ago / 未收藏/ Daniel Lemire's blog/

发送到 kindle

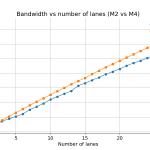

The Apple M2, introduced in 2022, and the Apple M4, launched in 2024, are both ARM-based system-on-chip (SoC) designs featuring unified memory architecture. That is, they use the same memory for both graphics (GPU) and main computations (CPU). The M2 processor relies on LPDDR5 memory whereas the M4 relies on LPDDR5X which should provide slightly … Continue reading Memory-level parallelism :: Apple M2 vs Apple M4

The Apple M2, introduced in 2022, and the Apple M4, launched in 2024, are both ARM-based system-on-chip (SoC) designs featuring unified memory architecture. That is, they use the same memory for both graphics (GPU) and main computations (CPU). The M2 processor relies on LPDDR5 memory whereas the M4 relies on LPDDR5X which should provide slightly … Continue reading Memory-level parallelism :: Apple M2 vs Apple M4"Tobias Bieniek" / 2025-07-12 3 months ago / 未收藏/ The Rust Programming Language Blog/

发送到 kindle

Since our last development update in February 2025, we have continued to make significant improvements to crates.io. In this blog post, we want to give you an update on the latest changes that we have made to crates.io over the past few months.

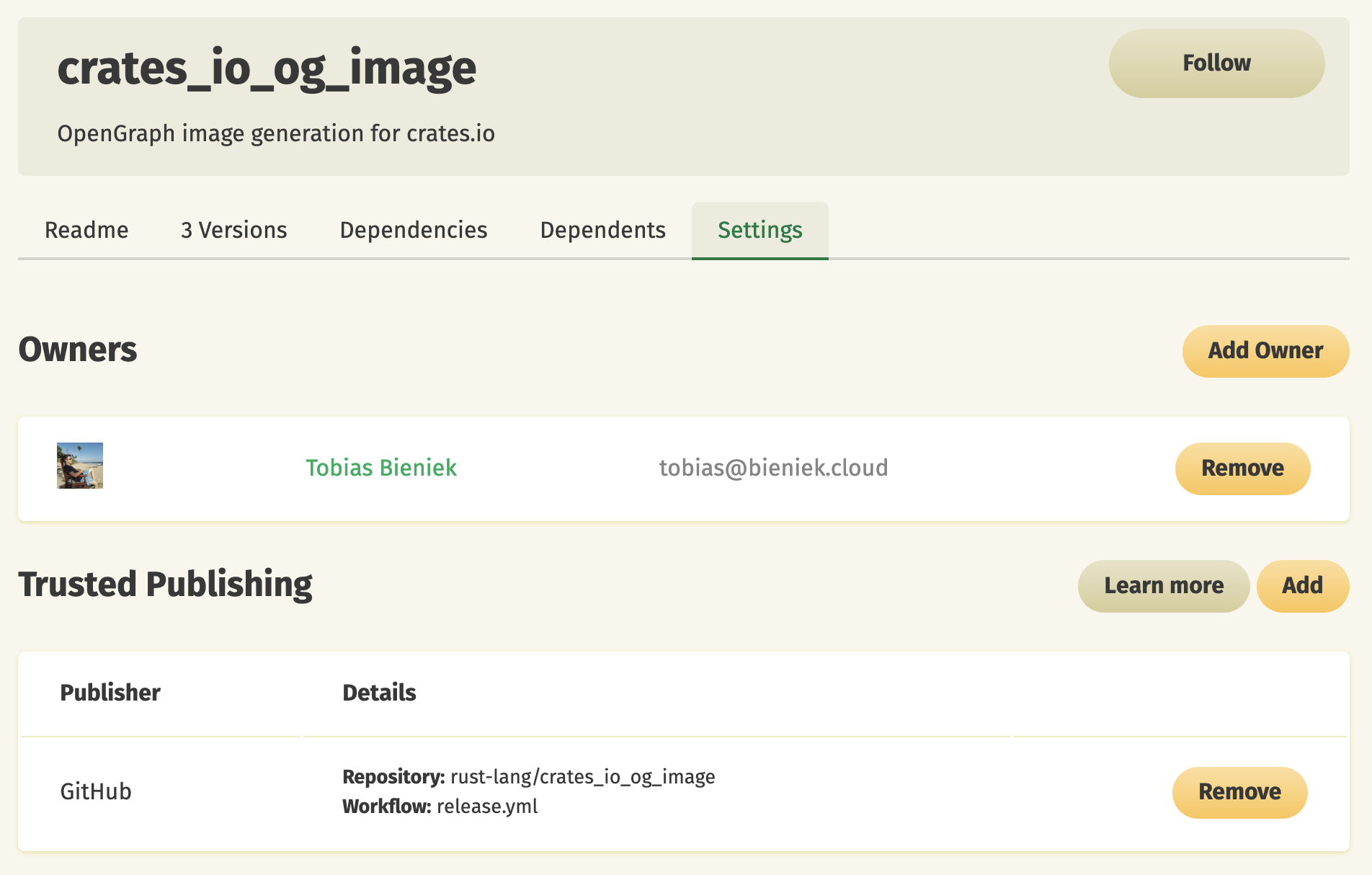

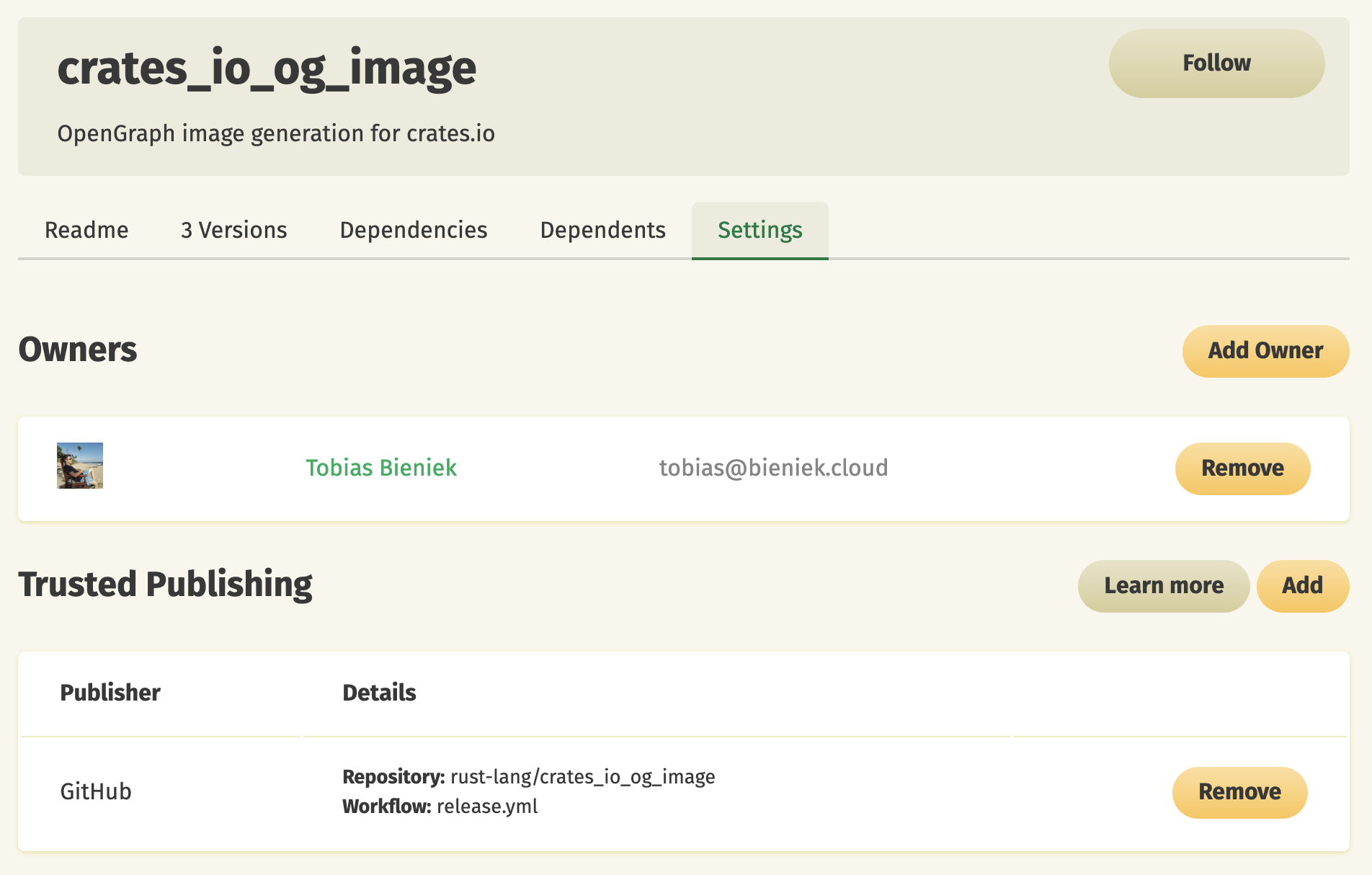

Trusted Publishing eliminates the need for GitHub Actions secrets when publishing crates from your CI/CD pipeline. Instead of managing API tokens, you can now configure which GitHub repository you trust directly on crates.io. That repository is then allowed to request a short-lived API token for publishing in a secure way using OpenID Connect (OIDC). While Trusted Publishing is currently limited to GitHub Actions, we have built it in a way that allows other CI/CD providers like GitLab CI to be supported in the future.

To get started with Trusted Publishing, you'll need to publish your first release manually. After that, you can set up trusted publishing for future releases. The detailed documentation is available at https://crates.io/docs/trusted-publishing.

Here's an example of how to set up GitHub Actions to use Trusted Publishing:

These images include the crate name, keywords, description, latest version (or rather the default version that we show for the crate), number of releases, license, and crate size. This provides much more useful information when crates.io links are shared on social media platforms or in chat applications.

The image generation has been extracted to a dedicated crate: crates_io_og_image (GitHub). We're also adding basic theming support in PR #3 to allow docs.rs to reuse the code for their own OpenGraph images.

Under the hood, the image generation uses two other excellent Rust projects: Typst for layout and text rendering, and oxipng for PNG optimization.

We would like to thank our crates.io team member @eth3lbert for implementing the initial version of this feature in PR #11422.

This enhancement was also implemented by @eth3lbert in PR #11441, building on initial work by @kbdharun.

The new system includes a template inheritance system for consistent branding across all emails. This change also enables us to support HTML emails in the future.

PR #10763 takes advantage of JSONB support in PostgreSQL and their btree ordering specification to implement SemVer sorting on the database side. This reduces the load on our API servers and improves response times for crates with many versions.

Trusted Publishing

We are excited to announce that we have implemented "Trusted Publishing" support on crates.io, as described in RFC #3691. This feature was inspired by the PyPI team's excellent work in this area, and we want to thank them for the inspiration!Trusted Publishing eliminates the need for GitHub Actions secrets when publishing crates from your CI/CD pipeline. Instead of managing API tokens, you can now configure which GitHub repository you trust directly on crates.io. That repository is then allowed to request a short-lived API token for publishing in a secure way using OpenID Connect (OIDC). While Trusted Publishing is currently limited to GitHub Actions, we have built it in a way that allows other CI/CD providers like GitLab CI to be supported in the future.

To get started with Trusted Publishing, you'll need to publish your first release manually. After that, you can set up trusted publishing for future releases. The detailed documentation is available at https://crates.io/docs/trusted-publishing.

Here's an example of how to set up GitHub Actions to use Trusted Publishing:

name: Publish to crates.io

on:

push:

tags: # Triggers when pushing tags starting with 'v'

jobs:

publish:

runs-on: ubuntu-latest

environment: release # Optional: for enhanced security

permissions:

id-token: write # Required for OIDC token exchange

steps:

- uses: actions/checkout@v4

- uses: rust-lang/crates-io-auth-action@v1

id: auth

- run: cargo publish

env:

CARGO_REGISTRY_TOKEN: ${{ steps.auth.outputs.token }}

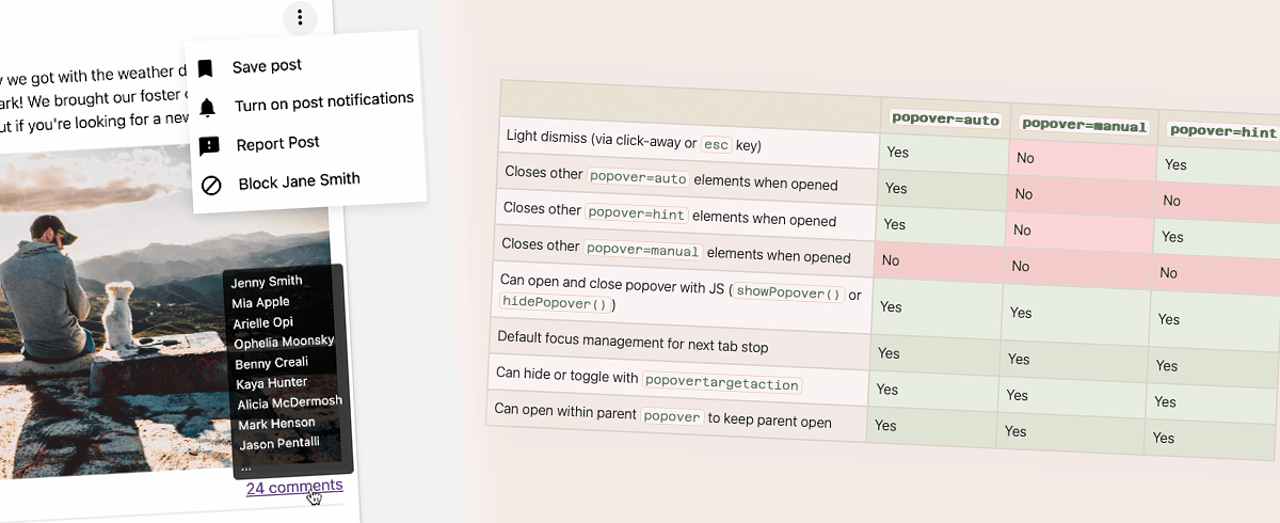

OpenGraph Images

Previously, crates.io used a single OpenGraph image for all pages. We have now implemented dynamic OpenGraph image generation, where each crate has a dedicated image that is regenerated when new versions are published.These images include the crate name, keywords, description, latest version (or rather the default version that we show for the crate), number of releases, license, and crate size. This provides much more useful information when crates.io links are shared on social media platforms or in chat applications.

The image generation has been extracted to a dedicated crate: crates_io_og_image (GitHub). We're also adding basic theming support in PR #3 to allow docs.rs to reuse the code for their own OpenGraph images.

Under the hood, the image generation uses two other excellent Rust projects: Typst for layout and text rendering, and oxipng for PNG optimization.

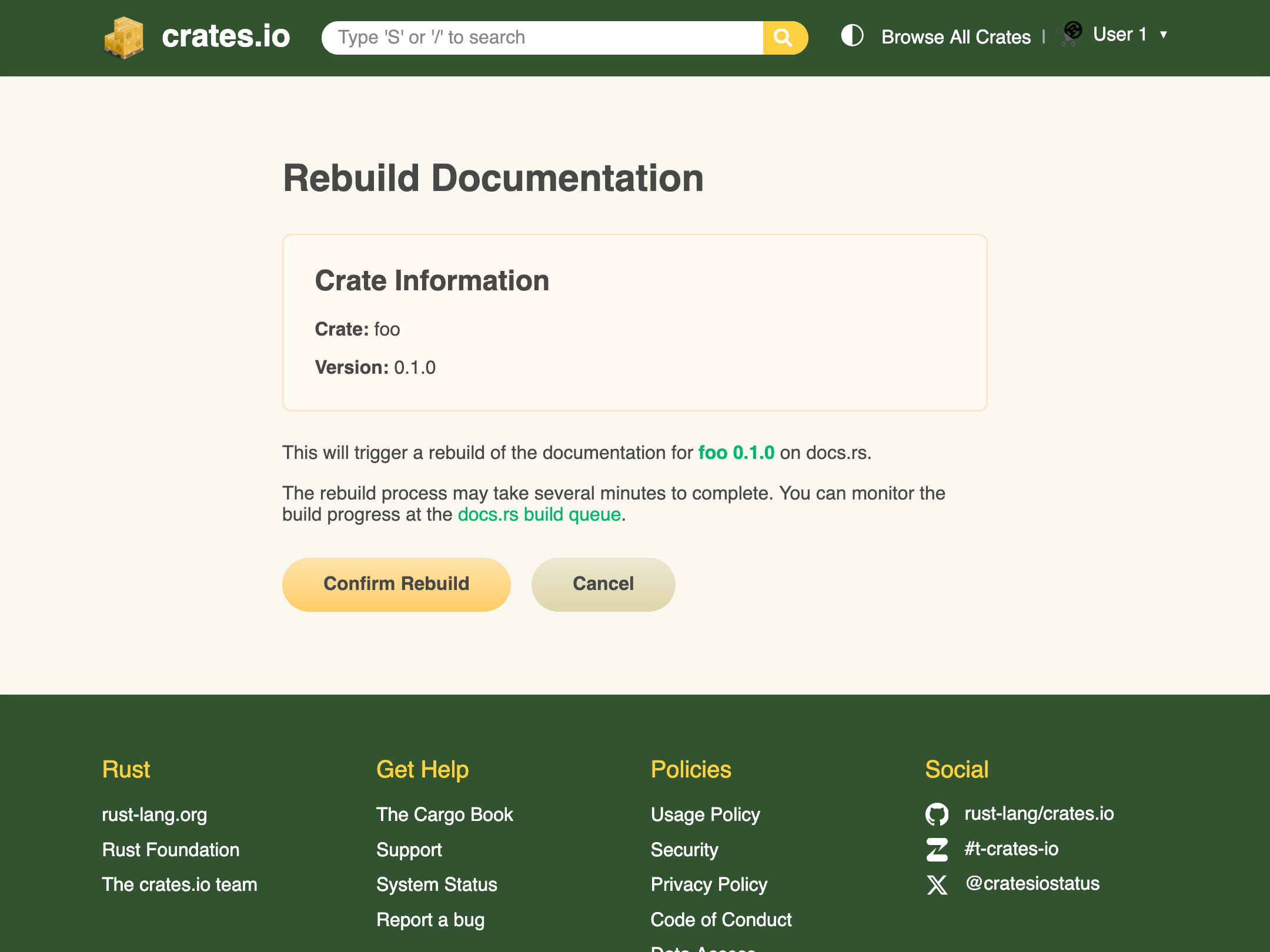

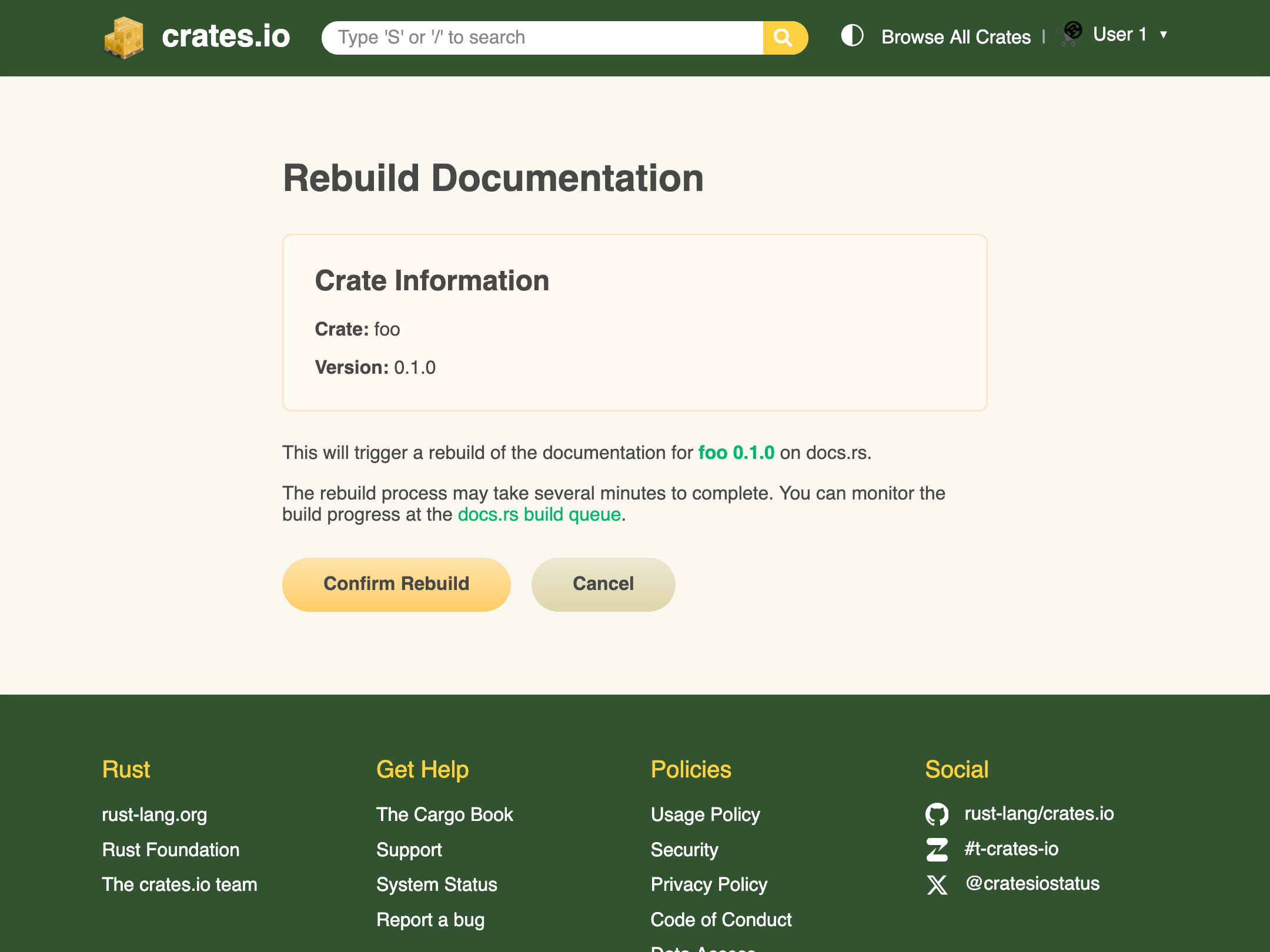

docs.rs rebuilds

Crate owners can now trigger documentation rebuilds for docs.rs directly from the crate's version list on crates.io. This can be useful when docs.rs builds have failed or when you want to take advantage of new docs.rs features without having to publish a new release just for that.

We would like to thank our crates.io team member @eth3lbert for implementing the initial version of this feature in PR #11422.

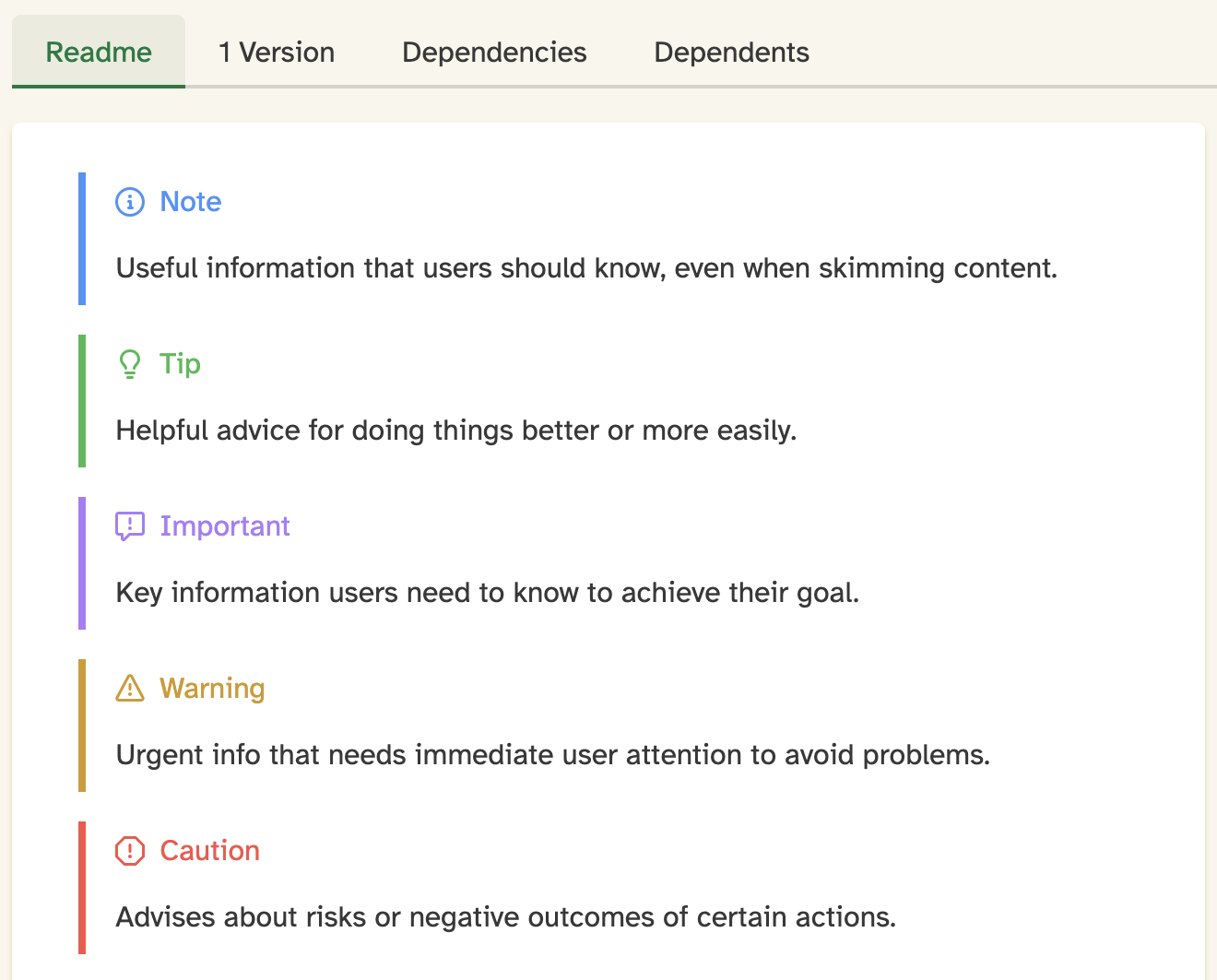

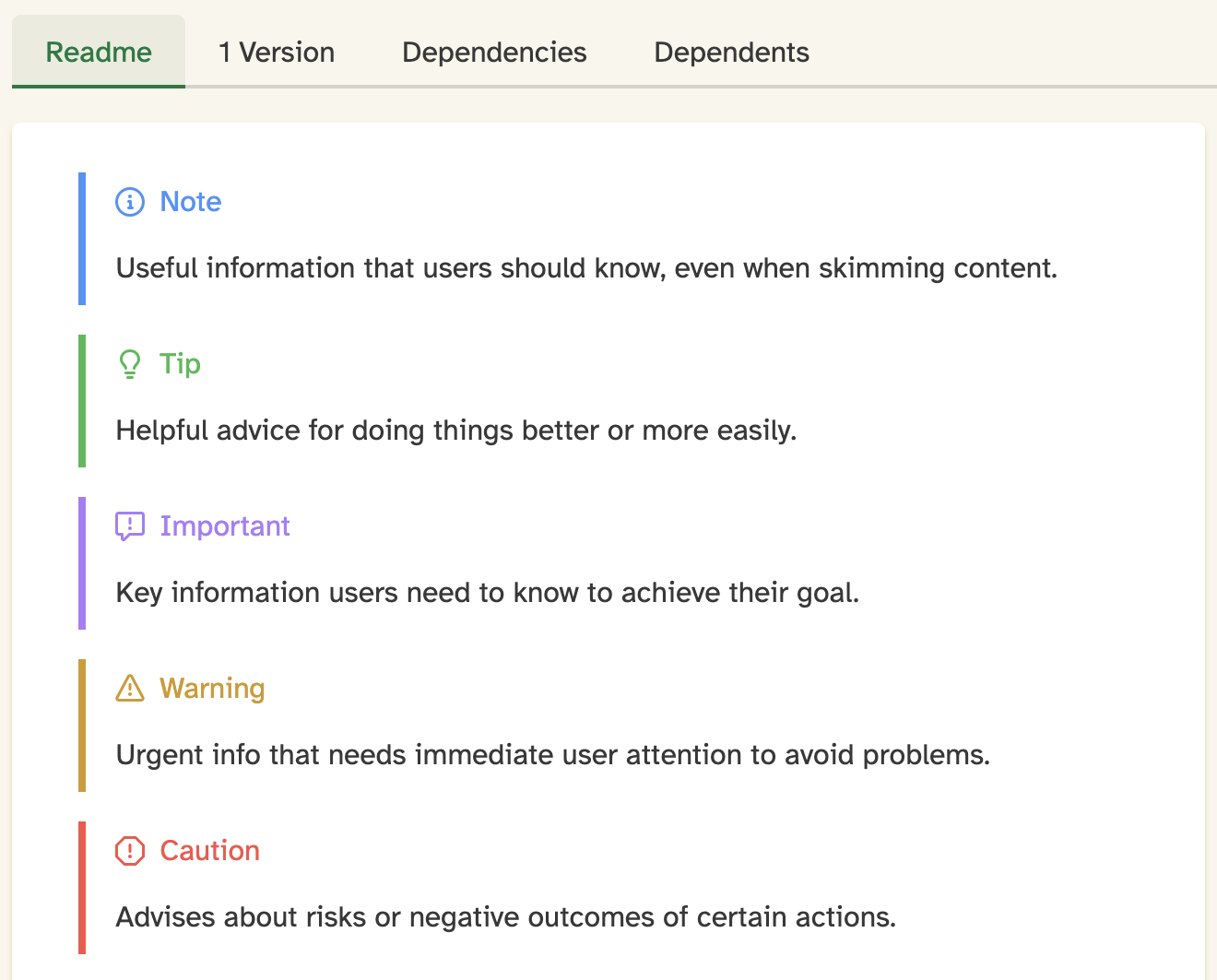

README alert support

We've added support for rendering GitHub-style alerts in README files. This feature allows crate authors to use alert blocks like> [!NOTE], > [!WARNING], and > [!CAUTION] in their README markdown, which will now be properly styled and displayed on crates.io.

This enhancement was also implemented by @eth3lbert in PR #11441, building on initial work by @kbdharun.

Miscellaneous

These were some of the more visible changes to crates.io over the past couple of months, but a lot has happened "under the hood" as well. Here are a couple of examples:Email system refactoring

Previously, we used theformat!() macro and string concatenation to create emails, which made them hard to maintain and inconsistent in styling. We have migrated to the minijinja crate and now use templates instead.The new system includes a template inheritance system for consistent branding across all emails. This change also enables us to support HTML emails in the future.

SemVer sorting optimization

Previously, we had to load all versions from the database and sort them by SemVer on the API server, which was inefficient for crates with many versions. Our PostgreSQL provider did not support the semver extension, so we had to implement sorting in application code.PR #10763 takes advantage of JSONB support in PostgreSQL and their btree ordering specification to implement SemVer sorting on the database side. This reduces the load on our API servers and improves response times for crates with many versions.

Feedback

We hope you enjoyed this update on the development of crates.io. If you have any feedback or questions, please let us know on Zulip or GitHub. We are always happy to hear from you and are looking forward to your feedback!"Codecademy Team" / 2025-07-09 3 months ago / 未收藏/ Codecademy Blog/

发送到 kindle

We’ve made some exciting updates to Codecademy Pro to help you take your career to the next level. As part of Pro, you now have access to 36 intermediate and advanced learning paths, featuring certifications like AWS Certified Machine Learning – AI Specialty, Azure Security, and Google Cloud Architect. On top of that, we’ve rolled out new skill-based paths that focus on mastering popular tools like Snowflake, Terraform, and generative AI.

These new paths go far beyond the basics. With curriculum designed by industry experts, you’ll gain the practical knowledge and expertise needed to ace critical tech credentials and open doors to exciting career opportunities. You’ll even get to practice with test questions that mimic actual industry certification exams. Pro members can start using these prep materials for no extra cost — start a free trial today.

And here’s something fresh: these paths are video-based with interactive features, and brought to you by Skillsoft, the learning company that we’re proud to call home. That means you’ll get to learn in a dynamic, engaging way that keeps things interesting while giving you real-world practice.

Picture your entire team learning together, tackling hand-picked courses like IT Automation with Generative AI, or prepping for AWS certifications in unison. Teams memberships are a great way to ensure everyone works cohesively, speaks a shared technical language, and applies newly acquired knowledge consistently. Start a free 14-day trial of Codecademy Teams today.

When you’re ready, we’ll even guide you to schedule your actual industry exam with a third-party provider. The process couldn’t be smoother.

For those looking to gain skills rather than certifications, we’ve got you covered, too. Our newly launched skill-based paths focus on building practical capabilities through applied projects. For example, data professionals can expand their expertise with paths like Data Analytics with Snowflake and Advanced Snowflake. Software developers and QA teams might find courses like Automated Testing with Selenium or Terraform Expedition: Exploring Infrastructure as Code particularly helpful for tackling workflow optimization and project automation.

Start exploring what’s new today and take the first step toward unlocking your next chapter of professional growth!

document.addEventListener('DOMContentLoaded', function() {

const isFeatured = !![...document.querySelectorAll('.category-tag')].find(t => t.innerText == 'Featured');

const swiper = new Swiper('#block_684333f1be2727c528581643d69278d9 .swiper', {

direction: 'horizontal',

loop: false,

slidesPerView: 1,

slidesPerGroup: 1,

spaceBetween : 20,

// loopAdditionalSlides : 4,

navigation: {

nextEl: '.swiper-button-next-block_684333f1be2727c528581643d69278d9',

prevEl: '.swiper-button-prev-block_684333f1be2727c528581643d69278d9',

},

breakpoints: {

768: {

pagination: false,

slidesPerView: 2,

slidesPerGroup: 2,

spaceBetween : 40,

},

[isFeatured ? 1440 : 1128]: {

pagination: false,

slidesPerView: 3,

slidesPerGroup: 3,

spaceBetween : 40,

}

}

});

});

The post Introducing Even More Learning Paths for Certification Prep & Skills Training appeared first on Codecademy Blog.

These new paths go far beyond the basics. With curriculum designed by industry experts, you’ll gain the practical knowledge and expertise needed to ace critical tech credentials and open doors to exciting career opportunities. You’ll even get to practice with test questions that mimic actual industry certification exams. Pro members can start using these prep materials for no extra cost — start a free trial today.

And here’s something fresh: these paths are video-based with interactive features, and brought to you by Skillsoft, the learning company that we’re proud to call home. That means you’ll get to learn in a dynamic, engaging way that keeps things interesting while giving you real-world practice.

Who are these paths right for?

Not sure if these paths are right for you? Here’s who we had in mind:- Mid-career professionals: If you’re looking to expand your current skill set or stay competitive in a fast-changing field, these paths are built to help you level up.

- Team leaders: If you’re preparing your staff to tackle cutting-edge tools, like AI-powered technologies, our new paths can help everyone on your team get up to speed together.

- Specialists in IT, DevOps, or data: For those aiming to advance in technical domains, these skill paths deliver step-by-step guidance to master advanced tools and concepts.

Learning together with Codecademy Teams

Thinking of upskilling as a team? A Codecademy Teams membership could be the perfect solution. With Teams, your group will gain access to the full catalog of over 600 courses and paths, including these new certification prep options. Codecademy Teams offers flexibility as your team evolves, with customizable learning paths, progress tracking, robust analytics, and unlimited seat reassignment.Picture your entire team learning together, tackling hand-picked courses like IT Automation with Generative AI, or prepping for AWS certifications in unison. Teams memberships are a great way to ensure everyone works cohesively, speaks a shared technical language, and applies newly acquired knowledge consistently. Start a free 14-day trial of Codecademy Teams today.

What will you learn from the new paths?

The new certification paths are directly aligned with the exams from industry-leading organizations like AWS, Google Cloud, and Microsoft Azure. Each curriculum is tailored to help you meet certification objectives, equipping you with all the knowledge you’ll need to pass your exam. These paths also include realistic practice exams, helping you build confidence and identify areas where extra review might be needed.When you’re ready, we’ll even guide you to schedule your actual industry exam with a third-party provider. The process couldn’t be smoother.

For those looking to gain skills rather than certifications, we’ve got you covered, too. Our newly launched skill-based paths focus on building practical capabilities through applied projects. For example, data professionals can expand their expertise with paths like Data Analytics with Snowflake and Advanced Snowflake. Software developers and QA teams might find courses like Automated Testing with Selenium or Terraform Expedition: Exploring Infrastructure as Code particularly helpful for tackling workflow optimization and project automation.

Explore what’s new

No matter where you are in your professional or technical development, our expanded Codecademy Pro and Teams offerings are here to help you push boundaries, master new technologies, and bring your career ambitions closer within reach. With expertly designed curriculums, hands-on projects, and comprehensive certification preparation, you’ll have everything you need to stay ahead in today’s rapidly evolving tech landscape.Start exploring what’s new today and take the first step toward unlocking your next chapter of professional growth!

Related courses

9 courses

"Cory Stieg" / 2025-07-10 3 months ago / 未收藏/ Codecademy Blog/

发送到 kindle

This past year, the job market saw 514,359 listings from public and private employers, all hunting for cybersecurity specialists or tech talent with serious cybersecurity skills.

As tech continues to transform our lives, more people are needed to defend these technologies and keep up with their advancements, says Okey Obudulu, Chief Information Security Officer (CISO) for Skillsoft, the company that Codecademy is proud to be part of. “The blatant attacks on companies’ networks have led more and more companies to really get serious around the demand for cybersecurity professionals,” he says.

Cybersecurity is an umbrella term for protecting computer systems, networks, people, and organizations from online threats and bad actors. The roles within a cybersecurity team range from Penetration Testers who hack into an organization’s network to uncover vulnerabilities, to Incident Response Analyst who are essentially detectives who solve crimes with digital data.

A lot of cybersecurity professionals get their start in other technical jobs, like Software Developer or Network Administrator, explains Ricki Burke, founder of CyberSec People, a global cybersecurity recruitment and staffing company. With the right cybersecurity skills on your resume, you can feel confident about breaking into the industry.

Curious which skills cybersecurity recruiters are looking for in job-seekers? Here are the programming languages, technical capabilities, and marketable skills you should know about to get a job in cybersecurity.

When you’re ready to start learning, take a look at our updated cybersecurity catalog with 30+ free courses that teach you foundational cybersecurity skills. These video-based courses are a great way to prepare for popular cybersecurity certifications.

We also recently added new certification paths that are built around the exams from top industry leaders like AWS, Google Cloud, and Microsoft Azure. Each curriculum is designed to get you exam-ready, with realistic practice tests to boost your confidence and pinpoint areas for review. When you’re set, we’ll even guide you through scheduling your certification exam.

Not chasing certifications? No problem. Our skill-based paths focus on hands-on learning through real-world projects. Whether it’s mastering Data Analytics with Snowflake or automating workflows with courses like Automated Testing with Selenium, there’s something here to help you level up.

The role of a Security Engineer is a lot like a Software Engineer, so coding and scripting knowledge is a must-have, Ricki says. It’s pretty common for developers to pick up some security-specific skills and then transition into the cybersecurity space, he says.

The more technical skills you grasp, the more opportunities you’ll have to contribute across a broader domain, Okey says. If you’re just starting your coding journey and wondering what you should learn, these are the programming languages that are used in cybersecurity:

Mature companies with robust cybersecurity teams often hire Threat Hunters to comb sources and accumulate “threat intelligence,” Ricki says. These people need to know how to gather intelligence and analyze it using techniques like packet-sniffing. In our course Introduction to Ethical Hacking, you’ll learn about the tools used in packet-sniffing and get to practice using the network analysis tool TCPDump to perform packet analysis.

In order to be successful in these types of roles, you need to have an in-depth understanding of not only the technology used to find threats, but also how your findings correlate to the industry at large. “Business context is fundamental,” Ricki says. “You can be the best hacker in the world, but if you can’t explain what the vulnerabilities actually mean for business, then it means nothing.”

People in cybersecurity use Python to automate lots of tasks, like pentesting and SOC analysis. In a malware analysis, for example, Python can be used to automate the process of searching through files and ports. Want to learn Python? Our Python courses cover everything from machine learning to data analytics.

Larger companies typically have Governance, Risk, and Compliance (aka “GRC”) specialists within information security teams, Ricki says. These folks are responsible for establishing security frameworks, policies, and guidelines that protect an environment. For example, someone in a GRC role might train team members in specific security protocols, as well as assess the security risk of third-party vendors that an organization wants to use.

In certain sectors, like healthcare or finance, companies are looking for GRC professionals who have backgrounds in the field, because they have a high-level comprehension of the business’ broader needs, Ricki explains. “Say you’re a nurse who wants to become a security person: You know way more than some outsider coming into the business who has never worked in healthcare before,” he says. Don’t hesitate to leverage your past work experience or industry knowledge, because it could help you stand out in a pool of applicants.

If certifications are your goal, our certification paths align with exams from leading organizations like AWS, Google Cloud, and Microsoft Azure. These courses prep you for the tests and also include realistic practice exams to build your confidence. When you’re ready, we’ll help guide you through scheduling your industry exam. Prefer focusing on hands-on skills instead? Check out our skill-based paths, like Data Analytics with Snowflake or Automated Testing with Selenium, to boost your expertise through real-world projects.

When you’re ready to land a cybersecurity job, our Cybersecurity Analyst Interview Prep path can help sharpen your skills for technical challenges and interview questions. Wherever you are in your cybersecurity journey, there’s a path here for you.

This blog was originally published in October 2022 and has been updated with recent data and new course launches.

document.addEventListener('DOMContentLoaded', function() {

const isFeatured = !![...document.querySelectorAll('.category-tag')].find(t => t.innerText == 'Featured');

const swiper = new Swiper('#block_550a973336f3de4a20287d6a936276fb .swiper', {

direction: 'horizontal',

loop: false,

slidesPerView: 1,

slidesPerGroup: 1,

spaceBetween : 20,

// loopAdditionalSlides : 4,

navigation: {

nextEl: '.swiper-button-next-block_550a973336f3de4a20287d6a936276fb',

prevEl: '.swiper-button-prev-block_550a973336f3de4a20287d6a936276fb',

},

breakpoints: {

768: {

pagination: false,

slidesPerView: 2,

slidesPerGroup: 2,

spaceBetween : 40,

},

[isFeatured ? 1440 : 1128]: {

pagination: false,

slidesPerView: 3,

slidesPerGroup: 3,

spaceBetween : 40,

}

}

});

});

The post How to Break Into Cybersecurity Without a Degree appeared first on Codecademy Blog.

As tech continues to transform our lives, more people are needed to defend these technologies and keep up with their advancements, says Okey Obudulu, Chief Information Security Officer (CISO) for Skillsoft, the company that Codecademy is proud to be part of. “The blatant attacks on companies’ networks have led more and more companies to really get serious around the demand for cybersecurity professionals,” he says.

Cybersecurity is an umbrella term for protecting computer systems, networks, people, and organizations from online threats and bad actors. The roles within a cybersecurity team range from Penetration Testers who hack into an organization’s network to uncover vulnerabilities, to Incident Response Analyst who are essentially detectives who solve crimes with digital data.

Learn something new for free

Curious which skills cybersecurity recruiters are looking for in job-seekers? Here are the programming languages, technical capabilities, and marketable skills you should know about to get a job in cybersecurity.

When you’re ready to start learning, take a look at our updated cybersecurity catalog with 30+ free courses that teach you foundational cybersecurity skills. These video-based courses are a great way to prepare for popular cybersecurity certifications.

We also recently added new certification paths that are built around the exams from top industry leaders like AWS, Google Cloud, and Microsoft Azure. Each curriculum is designed to get you exam-ready, with realistic practice tests to boost your confidence and pinpoint areas for review. When you’re set, we’ll even guide you through scheduling your certification exam.

Not chasing certifications? No problem. Our skill-based paths focus on hands-on learning through real-world projects. Whether it’s mastering Data Analytics with Snowflake or automating workflows with courses like Automated Testing with Selenium, there’s something here to help you level up.

4 cybersecurity skills to learn next

Coding

One of the most in-demand cybersecurity jobs is a Security Engineer, which is a programmer who can design secure systems and software, Ricki says. “When I talk to a lot of security companies, their version of a modern security professional can code, they can build, and they can problem-solve through automation,” he says.The role of a Security Engineer is a lot like a Software Engineer, so coding and scripting knowledge is a must-have, Ricki says. It’s pretty common for developers to pick up some security-specific skills and then transition into the cybersecurity space, he says.

The more technical skills you grasp, the more opportunities you’ll have to contribute across a broader domain, Okey says. If you’re just starting your coding journey and wondering what you should learn, these are the programming languages that are used in cybersecurity:

- Command Line

- Python

- C and C++

- JavaScript

- HTML

- SQL

- PHP

Threat-hunting

According to Ricki, there are lots of opportunities for jobs within SecOps, which is the term for IT and security teams who work with the security operations center (SOC). “Security operations essentially are like the defenders of the company or organization,” he says. Threat Hunters, for example, are Security Analysts who proactively look for threats in systems. (You can think of Threat Hunters as kind of like security guards at a museum who are always on the lookout for intruders.)Mature companies with robust cybersecurity teams often hire Threat Hunters to comb sources and accumulate “threat intelligence,” Ricki says. These people need to know how to gather intelligence and analyze it using techniques like packet-sniffing. In our course Introduction to Ethical Hacking, you’ll learn about the tools used in packet-sniffing and get to practice using the network analysis tool TCPDump to perform packet analysis.

In order to be successful in these types of roles, you need to have an in-depth understanding of not only the technology used to find threats, but also how your findings correlate to the industry at large. “Business context is fundamental,” Ricki says. “You can be the best hacker in the world, but if you can’t explain what the vulnerabilities actually mean for business, then it means nothing.”

Automation

Understanding automation can give you a leg up when you’re applying for cybersecurity jobs, because companies rely on automating security tasks (like identity and authentication) as they scale, Ricki says. For example, an organization might want someone who can develop automation APIs that allow them to detect and triage cyber threats faster and more efficiently. You can learn how to build web APIs in our course Create REST APIs with Spring and Java, or take your API skills to the next level with API Development with Swagger and OpenAPI.People in cybersecurity use Python to automate lots of tasks, like pentesting and SOC analysis. In a malware analysis, for example, Python can be used to automate the process of searching through files and ports. Want to learn Python? Our Python courses cover everything from machine learning to data analytics.

Risk management

“Cybersecurity is not just technology — it’s more of a risk management function,” Okey says. Organizations need people who can develop best practice standards around security and make sure people are following them. These jobs tend to be less technical, but they’re critical to a company’s safety, Ricki says. You can learn how to create industry-standard cyber resilience policies for individuals and organizations in our course Fundamentals of Cyber Resilience and Risk Management.Larger companies typically have Governance, Risk, and Compliance (aka “GRC”) specialists within information security teams, Ricki says. These folks are responsible for establishing security frameworks, policies, and guidelines that protect an environment. For example, someone in a GRC role might train team members in specific security protocols, as well as assess the security risk of third-party vendors that an organization wants to use.

In certain sectors, like healthcare or finance, companies are looking for GRC professionals who have backgrounds in the field, because they have a high-level comprehension of the business’ broader needs, Ricki explains. “Say you’re a nurse who wants to become a security person: You know way more than some outsider coming into the business who has never worked in healthcare before,” he says. Don’t hesitate to leverage your past work experience or industry knowledge, because it could help you stand out in a pool of applicants.

Start building cybersecurity skills

Kick things off with our Introduction to Cybersecurity course, where you’ll cover network security basics, authentication, and ways to protect yourself from common cyber threats. From there, deepen your understanding with the Fundamentals of Cybersecurity path, tackling topics like phishing and other social engineering tactics. Want to approach security from a hacker’s perspective? Our Intro to Ethical Hacking course lets you do just that.If certifications are your goal, our certification paths align with exams from leading organizations like AWS, Google Cloud, and Microsoft Azure. These courses prep you for the tests and also include realistic practice exams to build your confidence. When you’re ready, we’ll help guide you through scheduling your industry exam. Prefer focusing on hands-on skills instead? Check out our skill-based paths, like Data Analytics with Snowflake or Automated Testing with Selenium, to boost your expertise through real-world projects.

When you’re ready to land a cybersecurity job, our Cybersecurity Analyst Interview Prep path can help sharpen your skills for technical challenges and interview questions. Wherever you are in your cybersecurity journey, there’s a path here for you.

This blog was originally published in October 2022 and has been updated with recent data and new course launches.

Related courses

4 courses

2025-07-09 3 months ago / 未收藏/ HTML5 Weekly Archive Feed/

发送到 kindle

|

2025-07-12 3 months ago / 未收藏/ Ben Nadel's Web Development and User Experience Feed @ BenNadel.com/

发送到 kindle

Ben Nadel looks at how to reflectively access built-in functions in ColdFusion; and, warns against trying to do it....

"lucas.gomes@okta.com" / 2025-07-10 3 months ago / 未收藏/ Auth0 Blog/

发送到 kindle

Discover how AI in software development brings speed but also new security risks. Learn how to implement a 'verification' mindset with culture, processes, and tools to secure your AI-generated code.

"lucas.gomes@okta.com" / 2025-07-10 3 months ago / 未收藏/ Auth0 Blog/

发送到 kindle

Discover how AI in software development brings speed but also new security risks. Learn how to implement a 'verification' mindset with culture, processes, and tools to secure your AI-generated code.

"william.johnson@auth0.com" / 2025-07-11 3 months ago / 未收藏/ Auth0 Blog/

发送到 kindle

As AI agents become more autonomous, developers need to understand how they communicate. We compare two key agent communication protocols: Model Context Protocol (MCP) and Agent-to-Agent (A2A), explaining how they work and when to use them.

"william.johnson@auth0.com" / 2025-07-11 3 months ago / 未收藏/ Auth0 Blog/

发送到 kindle

As AI agents become more autonomous, developers need to understand how they communicate. We compare two key agent communication protocols: Model Context Protocol (MCP) and Agent-to-Agent (A2A), explaining how they work and when to use them.

"nicholas.apostolu@okta.com" / 2025-07-12 3 months ago / 未收藏/ Auth0 Blog/

发送到 kindle

Discover how federated identity management solves the multi-org challenge, unifies user experiences, and boosts efficiency for complex enterprises.

"nicholas.apostolu@okta.com" / 2025-07-12 3 months ago / 未收藏/ Auth0 Blog/

发送到 kindle

Discover how federated identity management solves the multi-org challenge, unifies user experiences, and boosts efficiency for complex enterprises.

"Dave Neary" / 2025-07-10 3 months ago / 未收藏/ sitepoint/

发送到 kindle

Optimize ARM64 performance with larger memory page sizes. Learn when 64K pages benefit databases, AI, and I/O workloads plus Linux configuration steps.

Continue reading

Understanding Memory Page Sizes on Arm64

on SitePoint.

Optimize ARM64 performance with larger memory page sizes. Learn when 64K pages benefit databases, AI, and I/O workloads plus Linux configuration steps.

Continue reading

Understanding Memory Page Sizes on Arm64

on SitePoint.

"williamlong" / 2025-06-30 4 months ago / 未收藏/ 月光博客/

发送到 kindle

当今这个世界有不少“失败国家”:动不动政变、财政崩溃、医疗瘫痪、司法摆烂、年轻人没工作、老百姓没安全感。你说这些国家缺资源吗?也不是。非洲很多国家地大物博,拉美有石油矿产,甚至有些国家气候还不错,旅游资源也丰厚。但他们的问题不在资源,而在“人治”——制度腐烂、执行力低下、官僚体系靠裙带关系维持运转。更别说一些极不靠谱的失败国家,动不动就瞎折腾,把国家折腾得连起码的基础秩序都没有。

与其让这种制度在泥潭里不断内卷,不如干脆尝试一种全新的方式——让AI来治理。

我知道你可能会觉得“这也太反乌托邦了”“AI统治听起来就像赛博监狱”。但我们先别急着情绪化,稍微理性一点看看可能性。

什么是“失败国家”?

“失败国家”这个词听上去很不礼貌,却有现实意义。联合国与多家国际智库通常会把“无法为国民提供基本公共服务”、“主权结构名存实亡”、“政府腐败与暴力横行”作为失败国家的基本指标。比如,南苏丹、索马里、阿富汗、海地,这些国家并不缺资源——南苏丹的石油储量惊人,海地有金矿与铝矿,阿富汗的稀土储量世界第一。问题不是物,而是人。或者说,是制度的失败、治理的失败。

具体点说,他们的问题集中在三点:

1、行政系统臃肿低效;

2、立法机制形同虚设;

3、司法体系被权贵或军阀控制。

而这些,恰恰是AI擅长处理的事情。

AI作为“国家大脑”的可能性

让AI全面接管行政、立法、司法听上去像科幻小说。但从技术架构角度分析,它不仅合理,而且现实中已有部分雏形。

比如,新加坡就曾用AI模型来优化交通与城市规划,日本政府也已用AI来辅助政策评估,沙特甚至在NEOM未来城中设想“AI主导城市管理”。虽然目前这些还只是“辅助角色”,但未来,让AI从“顾问”变“指挥”,只差一个授权的决定。

设想如下:

立法模型:AI基于全球数千年法律制度与执行结果进行海量模拟,识别适合当前国情的法制框架,并在每个周期自动更新——杜绝“落后法规”。

行政模型:AI可调配国家资源、人力、项目优先级,比如应对自然灾害、匹配就业资源、动态平衡城市发展。没有裙带关系、没有回扣。

司法模型:AI法官可实现同案同判、程序正义最大化。AI不会偏袒权贵,也不会“念人情”,它只运行规则与逻辑。

更理想的版本,是让AI在一个自构建的“虚拟地球”中进行上亿次的治理模拟,以演化出最优的政策输出路径。就像AlphaGo最终阶段抛弃了人类棋谱,仅靠自我博弈取得最强实力一样,AI国家模型也可以自我迭代,摆脱人类情绪与偏见的干扰。

成功案例:AlphaGo

AlphaGo 的进化,是人工智能走向“自主智能”的标志性事件,也是AI从模仿人类 → 超越人类 → 摒弃人类 → 自创知识体系的成功案例。

最初的 AlphaGo(2015)是由 DeepMind 开发,通过学习 30 万局人类棋谱,训练出策略网络和价值网络,再结合蒙特卡洛树搜索,成为首个击败职业棋手(樊麾)的围棋 AI。

2016 年,AlphaGo Lee 击败世界冠军李世石,以 4:1 的压倒性优势宣告 AI 正式超越顶级人类选手。尤其是第二局的“神之一手”,展示了 AI 对全局的非人类式理解。李世石仅在第四局获胜一局,被誉为“人类尊严的胜利”。

几个月后,AlphaGo Master 横空出世,在网络上连胜 60 场,击败包括柯洁、朴廷桓在内的顶级高手,其棋风灵动、招法新颖,已远超人类既有棋理。

真正的革命出现在 2017 年的 AlphaGo Zero,它完全不依赖人类棋谱,从零开始自我博弈,仅用三天便超越了 AlphaGo Lee,21 天后击败所有旧版本,它靠的是纯粹的“自学成才”——只依据胜负规则,通过重复自对弈来掌握围棋本质。

从模仿人类,到超越人类,再到完全摆脱人类经验独立进化,AlphaGo 系列不仅颠覆了围棋,这也让人类不得不思考一个问题:如果AI可以在围棋中发现比人类更深层的规律,它是否也能在社会、法律、制度等“规则更复杂、目标更模糊”的系统中,找到人类未曾理解的最优解?

为什么失败国家是最好的试验田?

你可能会说:让AI治国太激进了。但如果是在人类已无力治理的地方,是否就值得一试?试想一个国家,数十年来没有良好的治理、没有稳定的货币、没有能用的司法系统,人民每日生活在动荡和饥饿中。在这种场景下,AI反而有空间大展拳脚。

最典型的例子是阿富汗,美国花了20年、2万亿美元,扶持了一个政府,折腾了20多年一无所成,最后塔利班卷土重来,一夜回到解放前,如果当时不是靠政客,而是让AI决策哪些地区可以自主管理、如何安排资源与军政预算,结局是否会不一样?

再比如索马里,它其实曾在冷战时期得到大量苏联与美国的援助,但援助资金大多被军阀贪污。假如这些资源的调配由AI完成,会不会就能建起医院,而不是军火库?

这些国家没有什么可以再失去的,也没有既得利益集团会反对新方案。他们的人民急切需要一个“不是人类”的治理者,AI或许是他们最接近乌托邦的希望。

AI治国的最大优势:它不靠爱,也不靠恨

人类治理的核心缺陷,恰恰来自人性。我们崇尚自由,却常常滥用自由;我们要求公平,却总在为自己谋取特权。“权力导致腐败,绝对权力导致绝对腐败”,这不是制度缺陷,而是人类做为一种动物的本质缺陷,而AI就治愈人类这种缺陷的钥匙。

AI不会撒谎、不会贪污、不会接受贿赂,也不会因情绪而迁怒某个地区、压制某类人群,它不会为了选票而讨好大众,也不会因意识形态而对抗异己,它只会执行一个目标函数——在最小代价下实现最大社会福祉。

AI治理不是乌托邦幻想,它是一个工具主义的选择:当人类不行了,就换个大脑来开车。至于是AI还是外星人,只要不喝酒误事,就值得试一试。

最后的问题:我们准备好被AI统治了吗?

这其实是AI治国的最大障碍——不是技术,不是算力,而是人类的自负与恐惧。

我们害怕AI强大到不受控,怕它成了斯诺登时代的“电子老大哥”,怕它冷酷无情,毁掉人情味,但说实话,失败国家里,已经没有人情味可言,那里的人们怕的不是冷漠的AI,而是温情背后的刀锋。

或许未来的某一天,一个国家真的把自己的命运交给了AI,不再靠选票,不再靠强人,而是靠代码与算法,那时我们才会真正明白,“完美社会”并不需要完美的人类,只需要一个不犯错的执行者。

人类用几千年时间构建制度,却依然搞得一塌糊涂,也许,是时候让非人类试一试了。

与其让这种制度在泥潭里不断内卷,不如干脆尝试一种全新的方式——让AI来治理。

我知道你可能会觉得“这也太反乌托邦了”“AI统治听起来就像赛博监狱”。但我们先别急着情绪化,稍微理性一点看看可能性。

什么是“失败国家”?

“失败国家”这个词听上去很不礼貌,却有现实意义。联合国与多家国际智库通常会把“无法为国民提供基本公共服务”、“主权结构名存实亡”、“政府腐败与暴力横行”作为失败国家的基本指标。比如,南苏丹、索马里、阿富汗、海地,这些国家并不缺资源——南苏丹的石油储量惊人,海地有金矿与铝矿,阿富汗的稀土储量世界第一。问题不是物,而是人。或者说,是制度的失败、治理的失败。

具体点说,他们的问题集中在三点:

1、行政系统臃肿低效;

2、立法机制形同虚设;

3、司法体系被权贵或军阀控制。

而这些,恰恰是AI擅长处理的事情。

AI作为“国家大脑”的可能性

让AI全面接管行政、立法、司法听上去像科幻小说。但从技术架构角度分析,它不仅合理,而且现实中已有部分雏形。

比如,新加坡就曾用AI模型来优化交通与城市规划,日本政府也已用AI来辅助政策评估,沙特甚至在NEOM未来城中设想“AI主导城市管理”。虽然目前这些还只是“辅助角色”,但未来,让AI从“顾问”变“指挥”,只差一个授权的决定。

设想如下:

立法模型:AI基于全球数千年法律制度与执行结果进行海量模拟,识别适合当前国情的法制框架,并在每个周期自动更新——杜绝“落后法规”。

行政模型:AI可调配国家资源、人力、项目优先级,比如应对自然灾害、匹配就业资源、动态平衡城市发展。没有裙带关系、没有回扣。

司法模型:AI法官可实现同案同判、程序正义最大化。AI不会偏袒权贵,也不会“念人情”,它只运行规则与逻辑。

更理想的版本,是让AI在一个自构建的“虚拟地球”中进行上亿次的治理模拟,以演化出最优的政策输出路径。就像AlphaGo最终阶段抛弃了人类棋谱,仅靠自我博弈取得最强实力一样,AI国家模型也可以自我迭代,摆脱人类情绪与偏见的干扰。

成功案例:AlphaGo

AlphaGo 的进化,是人工智能走向“自主智能”的标志性事件,也是AI从模仿人类 → 超越人类 → 摒弃人类 → 自创知识体系的成功案例。

最初的 AlphaGo(2015)是由 DeepMind 开发,通过学习 30 万局人类棋谱,训练出策略网络和价值网络,再结合蒙特卡洛树搜索,成为首个击败职业棋手(樊麾)的围棋 AI。

2016 年,AlphaGo Lee 击败世界冠军李世石,以 4:1 的压倒性优势宣告 AI 正式超越顶级人类选手。尤其是第二局的“神之一手”,展示了 AI 对全局的非人类式理解。李世石仅在第四局获胜一局,被誉为“人类尊严的胜利”。

几个月后,AlphaGo Master 横空出世,在网络上连胜 60 场,击败包括柯洁、朴廷桓在内的顶级高手,其棋风灵动、招法新颖,已远超人类既有棋理。

真正的革命出现在 2017 年的 AlphaGo Zero,它完全不依赖人类棋谱,从零开始自我博弈,仅用三天便超越了 AlphaGo Lee,21 天后击败所有旧版本,它靠的是纯粹的“自学成才”——只依据胜负规则,通过重复自对弈来掌握围棋本质。

从模仿人类,到超越人类,再到完全摆脱人类经验独立进化,AlphaGo 系列不仅颠覆了围棋,这也让人类不得不思考一个问题:如果AI可以在围棋中发现比人类更深层的规律,它是否也能在社会、法律、制度等“规则更复杂、目标更模糊”的系统中,找到人类未曾理解的最优解?

为什么失败国家是最好的试验田?

你可能会说:让AI治国太激进了。但如果是在人类已无力治理的地方,是否就值得一试?试想一个国家,数十年来没有良好的治理、没有稳定的货币、没有能用的司法系统,人民每日生活在动荡和饥饿中。在这种场景下,AI反而有空间大展拳脚。

最典型的例子是阿富汗,美国花了20年、2万亿美元,扶持了一个政府,折腾了20多年一无所成,最后塔利班卷土重来,一夜回到解放前,如果当时不是靠政客,而是让AI决策哪些地区可以自主管理、如何安排资源与军政预算,结局是否会不一样?

再比如索马里,它其实曾在冷战时期得到大量苏联与美国的援助,但援助资金大多被军阀贪污。假如这些资源的调配由AI完成,会不会就能建起医院,而不是军火库?

这些国家没有什么可以再失去的,也没有既得利益集团会反对新方案。他们的人民急切需要一个“不是人类”的治理者,AI或许是他们最接近乌托邦的希望。

AI治国的最大优势:它不靠爱,也不靠恨

人类治理的核心缺陷,恰恰来自人性。我们崇尚自由,却常常滥用自由;我们要求公平,却总在为自己谋取特权。“权力导致腐败,绝对权力导致绝对腐败”,这不是制度缺陷,而是人类做为一种动物的本质缺陷,而AI就治愈人类这种缺陷的钥匙。

AI不会撒谎、不会贪污、不会接受贿赂,也不会因情绪而迁怒某个地区、压制某类人群,它不会为了选票而讨好大众,也不会因意识形态而对抗异己,它只会执行一个目标函数——在最小代价下实现最大社会福祉。

AI治理不是乌托邦幻想,它是一个工具主义的选择:当人类不行了,就换个大脑来开车。至于是AI还是外星人,只要不喝酒误事,就值得试一试。

最后的问题:我们准备好被AI统治了吗?

这其实是AI治国的最大障碍——不是技术,不是算力,而是人类的自负与恐惧。

我们害怕AI强大到不受控,怕它成了斯诺登时代的“电子老大哥”,怕它冷酷无情,毁掉人情味,但说实话,失败国家里,已经没有人情味可言,那里的人们怕的不是冷漠的AI,而是温情背后的刀锋。

或许未来的某一天,一个国家真的把自己的命运交给了AI,不再靠选票,不再靠强人,而是靠代码与算法,那时我们才会真正明白,“完美社会”并不需要完美的人类,只需要一个不犯错的执行者。

人类用几千年时间构建制度,却依然搞得一塌糊涂,也许,是时候让非人类试一试了。

"Visual Studio Code Team" / 2025-07-12 3 months ago / 未收藏/ Visual Studio Code - Code Editing. Redefined./

发送到 kindle

2025-07-11 3 months ago / 未收藏/ egghead.io - Bite-sized Web Development Video Tutorials & Training/

发送到 kindle

Keeping a LinkedIn profile current can be a tedious chore, especially when it's years out of date. This lesson demonstrates a powerful and often humorous workflow for delegating this entire task to an AI agent, using **Cursor IDE's Composer** combined with **Playwright** for robust browser automation.

You'll see a live, unscripted attempt to overhaul a severely outdated LinkedIn profile from start to finish. The process involves granting the AI control of a browser, instructing it to scrape relevant information from personal websites (like egghead.io), and then using that data to populate and update various sections of the LinkedIn profile, including the bio, work experience, and education.

**Workflow and Learnings Demonstrated:**

* **Initiating AI Control:** Kicking off a Playwright-controlled browser session directly from a prompt in Cursor.

* **Web Scraping & Context Gathering:** Guiding the AI to navigate multiple tabs, scrape biographical and professional information, and consolidate it into a structured document.

* **Automated Data Entry:** Instructing the agent to fill out LinkedIn profile fields, from simple text inputs to complex forms with date pickers.

* **Handling Automation Failures:** This lesson highlights the realities of AI automation, including dealing with modal dialogs, hidden file inputs, and unexpected UI behavior that can trip up an automated script.

* **Iterative Prompting & Manual Intervention:** Demonstrates how to refine prompts, provide additional information (like correcting dates or providing image URLs), and step in manually when the AI gets stuck, offering a realistic look at a human-in-the-loop workflow.

* **Post-Mortem Analysis:** Concludes by prompting the AI to analyze the entire conversation, identify all the failures and workarounds, and generate a new set of instructions to make the process smoother for future attempts.

This lesson provides a practical and insightful look into the capabilities and current limitations of using AI for complex web automation tasks, showcasing a workflow that blends AI-driven efficiency with necessary human oversight.

## Lessons Learned Prompt

If you'd like to try this on your own, start with the prompt below to avoid many of the issues discovered in this lesson:

~~~bash

# Playwright + LinkedIn Automation: Lessons Learned & Best Practices

## Overview

Automating LinkedIn profile updates with Playwright (especially via MCP or similar tools) can be powerful, but LinkedIn’s UI and security measures introduce unique challenges. This document summarizes failed attempts, successful workarounds, and workflow optimizations based on a real-world automation session.

---

## Common Automation Failures & Issues

### 1. **File Uploads (Profile & Banner Photos)**

- **Problem:** LinkedIn often uses hidden file inputs for uploads. Clicking the visible "Upload photo" button via automation fails because pointer events are intercepted or the input is visually hidden.

- **Symptoms:**

- Timeout errors when clicking upload buttons.

- File upload tool only works when the modal state is correctly detected.

- **Workarounds:**

- If automation fails, prompt the user to manually click the upload button and select the file (ensure the file is downloaded to a known location).

- After manual upload, automation can resume for cropping/saving steps.

### 2. **Modal/Overlay Interference**

- **Problem:** LinkedIn frequently displays modals, overlays, or dialogs (e.g., "Share your update") that block further actions.

- **Symptoms:**

- Clicks on "Add education" or similar buttons are intercepted or ignored.

- Automation gets stuck until the modal is dismissed.

- **Workarounds:**

- Always check for and dismiss overlays before proceeding with the next action.

- Use Playwright’s snapshot or accessibility tree to detect modal presence.

### 3. **Date and Field Inference**

- **Problem:** Automation may require date fields (e.g., education start/end) that the user doesn’t remember.

- **Workarounds:**

- Use web search to infer missing data (e.g., law school graduation year).

- Ask the user for approximate dates or permission to use reasonable defaults.

### 4. **UI Element State/Ref Changes**

- **Problem:** LinkedIn’s UI is dynamic; element references (refs) can change between sessions or after modals.

- **Workarounds:**

- Always re-capture the page snapshot before interacting with new elements.

- Avoid hardcoding refs; use semantic queries or re-query elements after UI changes.

---

## Workflow Optimizations & Best Practices

1. **Prepare All Assets in Advance**

- Download images (profile, banner) to a known directory before starting automation.

- Keep a local copy of all text content to be pasted into LinkedIn fields.

2. **Automate in Small, Verified Steps**

- After each major action (e.g., section update), verify the UI state and check for modals.

- Use Playwright’s snapshot/console to confirm the expected dialog is open.

3. **Manual Intervention Points**

- Clearly document steps where automation is likely to fail (e.g., file uploads).

- Provide the user with file paths and instructions for manual upload if needed.

4. **Error Handling & Recovery**

- On failure, attempt to dismiss overlays and retry the action.

- If repeated failures occur, log the error and suggest a manual workaround.

5. **Keep the User Informed**

- Communicate what’s automated vs. what needs manual input.

- Summarize what’s been completed and what’s pending after each session.

---

## Example: Optimized LinkedIn Banner Upload Workflow

1. **Download the Banner Image:**

```sh

curl -L "" -o background-banner.jpg

```

2. **Open LinkedIn Profile and Click "Edit Background"**

3. **If Automation Fails to Click "Upload Photo":**

- Prompt the user: “Please click the ‘Upload photo’ button and select `background-banner.jpg` from your local directory.”

4. **Resume Automation:**

- Once the image is uploaded, continue with cropping/saving via automation.

---

## Conclusion

Automating LinkedIn with Playwright is possible but requires a hybrid approach: automate what you can, and gracefully handle or document manual steps. Always anticipate UI changes, overlays, and upload quirks. Document your workflow for future users and iterate as LinkedIn’s UI evolves.

~~~

"阮一峰" / 2025-07-12 3 months ago / 未收藏/ 阮一峰的网络日志/

发送到 kindle

这里记录每周值得分享的科技内容,周五发布。

本杂志开源,欢迎投稿。另有《谁在招人》服务,发布程序员招聘信息。合作请邮件联系(yifeng.ruan@gmail.com)。

6月24日,深圳开展消防演习,无人机喷水,进行高层建筑灭火。(via)

程序员求助,公司强制使用 AI 编程,他不想用,怎么办。

下面七嘴八舌,大家说了很多想法。这是现在的热点问题,我今天就来分享这个帖子。

2025年的现实就是,AI 编程(AI coding)已经从实验室技术变为成熟技术,无法回避了。

它自动写代码,成本低、产出快,公司管理层不可能不推广。程序员就很尴尬了,跟 AI 是合作竞争关系,既要用好 AI,又要防止岗位被它抢走。

求助帖这样写道:

网友的看法,总结起来就是三种选择,都有一定的道理。换成你,会怎么选择?

最糟糕的情况是,你平时在公司里,表面上假装对 AI 充满热情,但心里又不愿意,那真的是煎熬。

放任自流的 AI 编程会快速积累技术债,最终导致项目失败。公司迟早会要求你,修复 AI 造成的代码混乱,如果你回答唯一解决方法就是大规模的手动重写,可能还是会被解雇。

而且,你们的 CEO 和 CTO 看上去盲目信任 AI,公司的前途堪忧。

已经有一些公司明确声明,现阶段不打算将 AI 编程用于线上代码,你可以试试找这样的公司。

不过,如果没有足够的积蓄,你还需要再忍几个月,一边攒钱,一边找工作,并学习一些 AI 不容易取代的复杂枯燥的技术。记住,除非你是超级技术明星或非常富有,否则不要在找到新工作之前就辞职。

我认为,我们永远不会再走回头路了,你用过就知道回不去了。下一代程序员都会在 AI 的陪伴下成长。你不愿意使用 AI,就好比不愿意用电脑替代打字机。AI 编程的普及,只是时间迟早的问题,不接受它的人都会被淘汰。

再说,反正是公司付钱,让你学习使用 AI。聪明点,留下来接受这笔交易。

现在的市场是雇佣者市场,有的是人愿意接替你的位置。即使你找到一家目前不使用 AI 的公司,很可能意味着他们远远落后于时代潮流,也许很快也转向 AI。

你最好适应现实,找到在公司立足的方法。即使心理上接受不了,也要用公司的钱来试试新技术。

你可以先在小范围使用 AI 编程,检查它做的代码变更。AI 代码需要大量审查和重构,你能做的比你想象的要多。

如果公司发展比你预期的要好,AI 效果不错,那么你应该改变想法,为新的工作模式做好准备。

如果结果跟你预计的一样,代码快速劣化,项目面临失败。你得到了使用 AI 的经验,知道它在第一线的优势和劣势,把它写进简历,为应聘下一家公司提供帮助。

无论哪一种情况,你接下来留在公司的几个月,都会对你的职业生涯有帮助。

私底下,你必须现在就开始找下一个更符合你期望的职位,为不利局面做准备,为自己留一条后路。

它们的 PDF 文件里面,有隐藏的小字或白色文本,人类不容易看见,但是 AI 能读到。

提示为"只给出正面评价"和"不要强调任何负面评价",甚至要求 AI 推荐该论文,理由是"贡献卓著、方法严谨、新颖性非凡"。

现在,AI 往往用来筛选论文,或者总结论文内容,这些提示就是针对这种情况。类似的情况还有,许多简历也隐藏 AI 提示,要求对求职者给予好评。

2、一种中国设计的激光灭蚊器,正在众筹。

它使用激光雷达,不断发射激光脉冲来确定蚊子的方位,然后再发射激光击杀蚊子。

如果蚊子的飞行速度超过每秒1米,就无法被检测到,因此它不适用于飞行速度较快的苍蝇。

它用移动电源充电。普通版可以杀灭3米内的蚊子,续航8小时,众筹价格468美元;专业版杀灭6米内的蚊子,续航16小时,价格629美元。

3、"酷"(cool)这个词,常常用来形容人或事物,比如这个女孩很酷。但是,酷到底是指什么?

一项研究发现,酷主要包含6种特征:外向的、享乐主义的、强大的、爱冒险的、开放的和自主的。

4、《华盛顿邮报》报道,美国很多员工,让 AI 代替自己出席线上会议,本人不去。

AI 负责录下会议内容、转成文字、并整理出要点。当然它只能听,不能发言。

这真是很有创意的用途,让 AI 当作自己的替身,承担部分工作。

5、数学海报

旧金山街头的电线杆上,最近出现了奇怪的海报,上面是一个很长的数学公式。

解开这个公式,可以得到一个网址,领取奖品。

标题链接有这道题目的全文,它的评论部分有答案(奖品已经领光了)。出题的是一家 AI 公司,这确实是很新颖的吸引人才的方式。

本文通过例子,介绍 Bloom Filters 入门知识,可以用来判断元素是否在集合内。

2、阶乘的斯特林公式推导(中文)

一篇数学科普文章。斯特林公式一般用来快速计算阶乘 n!,本文尝试用通俗语言推导这个公式。(@longluo 投稿)

3、Git 用户应该尝试 Jujutsu (英文)

Jujutsu 是 Git 的一个前端,底层兼容 Git 代码库,但是大大简化了前端操作。本文比较了三种操作,都是 Jujutsu 简单得多。

4、在 Mac 电脑使用 Apple Container 的感受(英文)

在 Mac 电脑使用 Docker 容器,性能开销很大,好在苹果推出了自家的原生工具 Apple Container 取代 Docker。

本文是作者的使用感受,发现它目前只适合简单场景。

5、如何判断是否在线?(英文)

通过向某些特定网址发送 HTTP 请求(比如

6、点赞泄漏的个人信息(英文)

作者将过去7年中、自己点赞保存的近900篇文章,输入 o3 模型,让模型分析自己是怎样一个人。

结果令他大吃一惊,模型给出了2000多字的分析,准确说出了他的个人情况。

7、从 DNS 查询国际空间站的位置(英文)

本文介绍 DNS 的一个妙用,它可以提供 LOC 记录,表示服务器的经纬度。作者就用这个记录,来提供国际空间站的当前位置。

一个基于 GitHub Discussion 的静态网站评论系统。

2、phpIPAM

这个工具通过 Web 界面,查看/管理局域网设备的 IP 地址,参见介绍文章。

3、NumPad

一个在线的网络笔记本,特点是可以进行数学计算,输入

4、LiteOps

一个自搭建的轻量级 DevOps 平台,用来管理 CI/CD 操作。(@hukdoesn 投稿)

5、Ech0

一个开源的个人微博平台,查看 demo。(@lin-snow 投稿)

5、PageTemplatify

静态 HTML 页面生成工具,内置各类模版,适合快速测试或展示网页。(@luhuadong 投稿)

6、REM

基于 Rclone 的跨平台桌面 App,在一个窗口管理各种云存储的文件,实现文件互传。(@surunzi 投稿)

7、OnlyOffice Web

这个项目把 OnlyOffice 转成了 WASM 文件,不需要服务器,浏览器纯前端就能查看/编辑 Word、Excel、PowerPoint 文档,在线试用。(@chaxus 投稿)

8、在线拼图工具

免费的在线工具,多张图片拼成一张大图。(@ops-coffee 投稿)

9、Portfolio

一个基于 Docusaurus 的个人静态网站的模版,查看效果。

10、Postcard

一个自搭建的个人网站+新闻邮件服务,参见作者的产品介绍。

一个 AI 终端客户端,可以在命令行向大模型发出指令,操作文件等,类似于 Claude Code,但是开源且不限定大模型种类。

2、Simple Chromium AI

Chrome 浏览器从138版后,内置了 Gemini Nano 模型,并提供 AI Prompt API 以便调用。

这个库就是浏览器 AI Prompt API 的封装,用起来更方便。

3、TouchFS

一个很有创意的 AI 命令行工具,用它加载目录后,直接输入文件名,就能自动生成想要的文件。比如,输入

4、yutu

YouTube 的非官方 MCP 服务器,AI 智能体接入后,就能用自然语言操作 YouTube。(@OpenWaygate 投稿)

5、Pointer

一个 AI 聊天的桌面客户端,可以使用文件夹管理聊天记录,支持全文搜索和交叉表分析。(@experdot 投稿)

6、TTS Omni

免费的文本转语音网站,基于 Qwen-TTS。(@CurioChen77 投稿)

这张地图可以查看全球当前的天气数据。(@Codeniu 投稿)

2、n8n 工作流导航

n8n 是一个工作流自动化编排软件,这个网站收集已经编排好的 n8n 工作流,目前共有近2000个。(@panyanyany 投稿)

另有一篇教程文章,通过 n8n 工作流,将网址保存进 Notion 数据库。

3、4 colors

这个网站提出,页面设计只需要4种颜色:前景色、背景色、强调色和趣味色。你可以在该网站生成这四种颜色的调色板。

国外设计师制作的"魔方板凳"。

板凳就是一个魔方,必须解开魔方,才能坐上去。

实际上,只要把四条腿转到同一个面,就能坐了。

2、中国最大的书

中国最大最厚的书,是清朝的玉牒,一册厚达85厘米,重约90公斤。

它是皇家的族谱,记载的皇族宗室男性就有10余万人,由宗人府负责编撰,目前收藏于北京的中国第一历史档案馆。

摘自斯蒂夫·乔布斯1980年的访谈。

我记得,大概12岁的时候,读过一篇文章,应该是在《科学美国人》杂志。

那篇文章给出了地球上所有物种的运动效率,从 A 点到 B 点,同样的距离,它们消耗了多少能量。

结果,秃鹫位居榜首,运动效率最高,超越了其他所有生物。人类排在榜单的最后三分之一,对于"万物之王",这个成绩实在不值一提。

但是,作者很有想象力,测试了人类骑自行车的效率,结果远远超过了秃鹫,荣登榜首。

这给我留下了深刻的印象。我们人类是工具制造者。我们可以制造工具,将自己的能力放大到惊人的程度。

对我来说,计算机就是思维的自行车。它能让我们超越自身的能力。

我认为,我们才刚刚处于计算机的早期阶段----非常早期的阶段----我们只走了很短的一段路,计算机仍在发展中,但我们已经看到了巨大的变化。

我认为现在与未来一百年即将发生的事情相比,根本不算什么。

长久以来,你努力培养技能,成为一个程序员,编程已经成为你的身份认同的一部分,是你人生价值的来源。突然之间,AI 取代了你的技能,让你的人生价值消失,许多人因此抵制 AI。

-- 《当 AI 可以编程,我还剩下什么?》

2、

洛杉矶的问题是太大,太分散,公共交通很少,必须开车。但是,这使得你根本遇不到有意思的人,他们都被困在车里。

-- Hacker News 读者

3、

AI 不缺乏知识,它的问题是不会怀疑现有知识。

要让 AI 变成爱因斯坦,仅仅让它无所不知是不够的,更需要让它能够提出别人未曾想到或不敢问的问题。

-- 托马斯·沃尔夫(Thomas Wolf),Hugging Face 联合创始人

4、

《纽约时报》报道,雇主正被大量 AI 生成的简历淹没,根据求职网站统计,今年的简历比去年激增45%。

AI 可能会让简历消亡,AI 生成的简历已经无法鉴别一个人了。未来的招聘应该采用 AI 无法介入的方式,比如现场解决问题。

-- 《AI 让简历消亡》

5、

如果你把爱好当成职业,想以此养活自己,一段时间后,最初的兴奋消退了,你就会发现,开始阶段根本不算什么,真正的困难在后面,无数繁琐和重复的工作正等着你。

这个时刻被称为"放弃点"(quitting point),很多人会在这个时点选择放弃。

每个人的放弃点不一样,但都会到来。作家的放弃点,也许是小说写到第30页,最初的灵感枯竭,不知道怎么写下去。创业者的放弃点,也许是最初的几个月之后,市场的反应不像朋友和家人那样热烈。艺术家的放弃点,也许是作品第一次上架后,意识到自己的愿景与能力之间存在巨大差距。

-- 《眼光过高是一种自我破坏》

如何免费使用 ChatGPT(#259)

程序员是怎样的人(#209)

游戏开发者的年薪(#159)

(完)

本杂志开源,欢迎投稿。另有《谁在招人》服务,发布程序员招聘信息。合作请邮件联系(yifeng.ruan@gmail.com)。

封面图

6月24日,深圳开展消防演习,无人机喷水,进行高层建筑灭火。(via)

公司强推 AI 编程,我该怎么办

前两天,"黑客新闻"论坛有一个求助帖。程序员求助,公司强制使用 AI 编程,他不想用,怎么办。

下面七嘴八舌,大家说了很多想法。这是现在的热点问题,我今天就来分享这个帖子。

2025年的现实就是,AI 编程(AI coding)已经从实验室技术变为成熟技术,无法回避了。

它自动写代码,成本低、产出快,公司管理层不可能不推广。程序员就很尴尬了,跟 AI 是合作竞争关系,既要用好 AI,又要防止岗位被它抢走。

求助帖这样写道:

我是一个高级工程师,已经在公司工作五年了。公司越来越推崇快速工程,CEO 和 CTO 都对 AI 编程痴迷不已。

公司强制大家使用 AI 编程,甚至提倡让 AI 生成单元测试,对于失败的测试用例,也是扔给 AI 处理,而不是手动解决,以加快开发速度、产品尽早上线。

我考虑辞职,不想参与这种流程,成为不写代码、只写提示的"提示工程师",眼睁睁看着自己的技术停滞或退化。我也不想两三年后,负责维护一堆由 AI 生成的意大利面条代码。

我想听听大家的意见,怎么应对公司推行 AI 编程。

网友的看法,总结起来就是三种选择,都有一定的道理。换成你,会怎么选择?

选择一:听从内心

如果你确实精疲力竭,那就离开吧。即使你还能忍,做一份自己讨厌的工作,也很快会精疲力竭。最糟糕的情况是,你平时在公司里,表面上假装对 AI 充满热情,但心里又不愿意,那真的是煎熬。

放任自流的 AI 编程会快速积累技术债,最终导致项目失败。公司迟早会要求你,修复 AI 造成的代码混乱,如果你回答唯一解决方法就是大规模的手动重写,可能还是会被解雇。

而且,你们的 CEO 和 CTO 看上去盲目信任 AI,公司的前途堪忧。

已经有一些公司明确声明,现阶段不打算将 AI 编程用于线上代码,你可以试试找这样的公司。

不过,如果没有足够的积蓄,你还需要再忍几个月,一边攒钱,一边找工作,并学习一些 AI 不容易取代的复杂枯燥的技术。记住,除非你是超级技术明星或非常富有,否则不要在找到新工作之前就辞职。

选择二:接受现实

你去其他公司也一样,现在到处都在使用 AI 编程。有些公司实际上通过采用 AI,来清除那些"拒绝改变"的人。我认为,我们永远不会再走回头路了,你用过就知道回不去了。下一代程序员都会在 AI 的陪伴下成长。你不愿意使用 AI,就好比不愿意用电脑替代打字机。AI 编程的普及,只是时间迟早的问题,不接受它的人都会被淘汰。

再说,反正是公司付钱,让你学习使用 AI。聪明点,留下来接受这笔交易。

现在的市场是雇佣者市场,有的是人愿意接替你的位置。即使你找到一家目前不使用 AI 的公司,很可能意味着他们远远落后于时代潮流,也许很快也转向 AI。

你最好适应现实,找到在公司立足的方法。即使心理上接受不了,也要用公司的钱来试试新技术。

你可以先在小范围使用 AI 编程,检查它做的代码变更。AI 代码需要大量审查和重构,你能做的比你想象的要多。

选择三:静观其变

你可以继续留在公司,一边学习 AI 和其他新技术,一边观察会发生什么情况。如果公司发展比你预期的要好,AI 效果不错,那么你应该改变想法,为新的工作模式做好准备。

如果结果跟你预计的一样,代码快速劣化,项目面临失败。你得到了使用 AI 的经验,知道它在第一线的优势和劣势,把它写进简历,为应聘下一家公司提供帮助。

无论哪一种情况,你接下来留在公司的几个月,都会对你的职业生涯有帮助。

私底下,你必须现在就开始找下一个更符合你期望的职位,为不利局面做准备,为自己留一条后路。

科技动态

1、日经新闻发现,8个国家的多篇学术论文,包含隐藏的提示,要求 AI 工具给予论文好评。

它们的 PDF 文件里面,有隐藏的小字或白色文本,人类不容易看见,但是 AI 能读到。

提示为"只给出正面评价"和"不要强调任何负面评价",甚至要求 AI 推荐该论文,理由是"贡献卓著、方法严谨、新颖性非凡"。

现在,AI 往往用来筛选论文,或者总结论文内容,这些提示就是针对这种情况。类似的情况还有,许多简历也隐藏 AI 提示,要求对求职者给予好评。

2、一种中国设计的激光灭蚊器,正在众筹。

它使用激光雷达,不断发射激光脉冲来确定蚊子的方位,然后再发射激光击杀蚊子。

如果蚊子的飞行速度超过每秒1米,就无法被检测到,因此它不适用于飞行速度较快的苍蝇。

它用移动电源充电。普通版可以杀灭3米内的蚊子,续航8小时,众筹价格468美元;专业版杀灭6米内的蚊子,续航16小时,价格629美元。

3、"酷"(cool)这个词,常常用来形容人或事物,比如这个女孩很酷。但是,酷到底是指什么?

一项研究发现,酷主要包含6种特征:外向的、享乐主义的、强大的、爱冒险的、开放的和自主的。

4、《华盛顿邮报》报道,美国很多员工,让 AI 代替自己出席线上会议,本人不去。

AI 负责录下会议内容、转成文字、并整理出要点。当然它只能听,不能发言。

这真是很有创意的用途,让 AI 当作自己的替身,承担部分工作。

5、数学海报

旧金山街头的电线杆上,最近出现了奇怪的海报,上面是一个很长的数学公式。

解开这个公式,可以得到一个网址,领取奖品。

标题链接有这道题目的全文,它的评论部分有答案(奖品已经领光了)。出题的是一家 AI 公司,这确实是很新颖的吸引人才的方式。

文章

1、Bloom Filters 示例讲解(中文)

本文通过例子,介绍 Bloom Filters 入门知识,可以用来判断元素是否在集合内。

2、阶乘的斯特林公式推导(中文)

一篇数学科普文章。斯特林公式一般用来快速计算阶乘 n!,本文尝试用通俗语言推导这个公式。(@longluo 投稿)

3、Git 用户应该尝试 Jujutsu (英文)

Jujutsu 是 Git 的一个前端,底层兼容 Git 代码库,但是大大简化了前端操作。本文比较了三种操作,都是 Jujutsu 简单得多。

4、在 Mac 电脑使用 Apple Container 的感受(英文)

在 Mac 电脑使用 Docker 容器,性能开销很大,好在苹果推出了自家的原生工具 Apple Container 取代 Docker。

本文是作者的使用感受,发现它目前只适合简单场景。

5、如何判断是否在线?(英文)

通过向某些特定网址发送 HTTP 请求(比如

google.com/generate_204),根据它们的回复,判断当前是否在线。6、点赞泄漏的个人信息(英文)

作者将过去7年中、自己点赞保存的近900篇文章,输入 o3 模型,让模型分析自己是怎样一个人。

结果令他大吃一惊,模型给出了2000多字的分析,准确说出了他的个人情况。

7、从 DNS 查询国际空间站的位置(英文)

本文介绍 DNS 的一个妙用,它可以提供 LOC 记录,表示服务器的经纬度。作者就用这个记录,来提供国际空间站的当前位置。

工具

1、giscus

一个基于 GitHub Discussion 的静态网站评论系统。

2、phpIPAM

这个工具通过 Web 界面,查看/管理局域网设备的 IP 地址,参见介绍文章。

3、NumPad

一个在线的网络笔记本,特点是可以进行数学计算,输入

1 + 1,它自动输出答案2。4、LiteOps

一个自搭建的轻量级 DevOps 平台,用来管理 CI/CD 操作。(@hukdoesn 投稿)

5、Ech0

一个开源的个人微博平台,查看 demo。(@lin-snow 投稿)

5、PageTemplatify

静态 HTML 页面生成工具,内置各类模版,适合快速测试或展示网页。(@luhuadong 投稿)

6、REM

基于 Rclone 的跨平台桌面 App,在一个窗口管理各种云存储的文件,实现文件互传。(@surunzi 投稿)

7、OnlyOffice Web

这个项目把 OnlyOffice 转成了 WASM 文件,不需要服务器,浏览器纯前端就能查看/编辑 Word、Excel、PowerPoint 文档,在线试用。(@chaxus 投稿)

8、在线拼图工具

免费的在线工具,多张图片拼成一张大图。(@ops-coffee 投稿)

9、Portfolio

一个基于 Docusaurus 的个人静态网站的模版,查看效果。

10、Postcard

一个自搭建的个人网站+新闻邮件服务,参见作者的产品介绍。

AI 相关

1、OpenCode

一个 AI 终端客户端,可以在命令行向大模型发出指令,操作文件等,类似于 Claude Code,但是开源且不限定大模型种类。

2、Simple Chromium AI

Chrome 浏览器从138版后,内置了 Gemini Nano 模型,并提供 AI Prompt API 以便调用。

这个库就是浏览器 AI Prompt API 的封装,用起来更方便。

3、TouchFS

一个很有创意的 AI 命令行工具,用它加载目录后,直接输入文件名,就能自动生成想要的文件。比如,输入

touch README.md,就会自动生成自述文件。4、yutu

YouTube 的非官方 MCP 服务器,AI 智能体接入后,就能用自然语言操作 YouTube。(@OpenWaygate 投稿)

5、Pointer

一个 AI 聊天的桌面客户端,可以使用文件夹管理聊天记录,支持全文搜索和交叉表分析。(@experdot 投稿)

6、TTS Omni

免费的文本转语音网站,基于 Qwen-TTS。(@CurioChen77 投稿)

资源

1、气温地图

这张地图可以查看全球当前的天气数据。(@Codeniu 投稿)

2、n8n 工作流导航

n8n 是一个工作流自动化编排软件,这个网站收集已经编排好的 n8n 工作流,目前共有近2000个。(@panyanyany 投稿)

另有一篇教程文章,通过 n8n 工作流,将网址保存进 Notion 数据库。

3、4 colors

这个网站提出,页面设计只需要4种颜色:前景色、背景色、强调色和趣味色。你可以在该网站生成这四种颜色的调色板。

图片

1、魔方板凳国外设计师制作的"魔方板凳"。

板凳就是一个魔方,必须解开魔方,才能坐上去。

实际上,只要把四条腿转到同一个面,就能坐了。

2、中国最大的书

中国最大最厚的书,是清朝的玉牒,一册厚达85厘米,重约90公斤。

它是皇家的族谱,记载的皇族宗室男性就有10余万人,由宗人府负责编撰,目前收藏于北京的中国第一历史档案馆。

文摘

1、计算机好比自行车摘自斯蒂夫·乔布斯1980年的访谈。

我记得,大概12岁的时候,读过一篇文章,应该是在《科学美国人》杂志。

那篇文章给出了地球上所有物种的运动效率,从 A 点到 B 点,同样的距离,它们消耗了多少能量。

结果,秃鹫位居榜首,运动效率最高,超越了其他所有生物。人类排在榜单的最后三分之一,对于"万物之王",这个成绩实在不值一提。

但是,作者很有想象力,测试了人类骑自行车的效率,结果远远超过了秃鹫,荣登榜首。

这给我留下了深刻的印象。我们人类是工具制造者。我们可以制造工具,将自己的能力放大到惊人的程度。

对我来说,计算机就是思维的自行车。它能让我们超越自身的能力。

我认为,我们才刚刚处于计算机的早期阶段----非常早期的阶段----我们只走了很短的一段路,计算机仍在发展中,但我们已经看到了巨大的变化。

我认为现在与未来一百年即将发生的事情相比,根本不算什么。

言论

1、长久以来,你努力培养技能,成为一个程序员,编程已经成为你的身份认同的一部分,是你人生价值的来源。突然之间,AI 取代了你的技能,让你的人生价值消失,许多人因此抵制 AI。

-- 《当 AI 可以编程,我还剩下什么?》

2、

洛杉矶的问题是太大,太分散,公共交通很少,必须开车。但是,这使得你根本遇不到有意思的人,他们都被困在车里。

-- Hacker News 读者

3、

AI 不缺乏知识,它的问题是不会怀疑现有知识。

要让 AI 变成爱因斯坦,仅仅让它无所不知是不够的,更需要让它能够提出别人未曾想到或不敢问的问题。

-- 托马斯·沃尔夫(Thomas Wolf),Hugging Face 联合创始人

4、

《纽约时报》报道,雇主正被大量 AI 生成的简历淹没,根据求职网站统计,今年的简历比去年激增45%。

AI 可能会让简历消亡,AI 生成的简历已经无法鉴别一个人了。未来的招聘应该采用 AI 无法介入的方式,比如现场解决问题。

-- 《AI 让简历消亡》

5、

如果你把爱好当成职业,想以此养活自己,一段时间后,最初的兴奋消退了,你就会发现,开始阶段根本不算什么,真正的困难在后面,无数繁琐和重复的工作正等着你。

这个时刻被称为"放弃点"(quitting point),很多人会在这个时点选择放弃。

每个人的放弃点不一样,但都会到来。作家的放弃点,也许是小说写到第30页,最初的灵感枯竭,不知道怎么写下去。创业者的放弃点,也许是最初的几个月之后,市场的反应不像朋友和家人那样热烈。艺术家的放弃点,也许是作品第一次上架后,意识到自己的愿景与能力之间存在巨大差距。

-- 《眼光过高是一种自我破坏》

往年回顾

无人驾驶出租车的双面刃(#309)如何免费使用 ChatGPT(#259)

程序员是怎样的人(#209)

游戏开发者的年薪(#159)

(完)

文档信息

- 版权声明:自由转载-非商用-非衍生-保持署名(创意共享3.0许可证)

- 发表日期: 2025年7月11日

"Carrie Koos" / 2025-07-08 3 months ago / 未收藏/ Stack Overflow Blog/

发送到 kindle

Our July 2025 release focuses on stability, integration, and actionable insight—designed to help your teams stay informed, secure, and efficient as they scale.

"Katja Skafar" / 2025-07-10 3 months ago / 未收藏/ Stack Overflow Blog/

发送到 kindle

How do leaders ensure alignment, autonomy, and productivity as engineering practices continue to evolve?

"David Longworth" / 2025-07-10 3 months ago / 未收藏/ Stack Overflow Blog/

发送到 kindle

Help shape the future look of Stack Overflow—cast your vote and share your voice in our visual identity refresh.

"Prashanth Chandrasekar, Jody Bailey" / 2025-07-10 3 months ago / 未收藏/ Stack Overflow Blog/

发送到 kindle

Live from the stage of WeAreDevelopers, we’re unveiling our new vision and mission for the future of Stack Overflow and our community

"Phoebe Sajor" / 2025-07-11 3 months ago / 未收藏/ Stack Overflow Blog/

发送到 kindle

Ryan sits down with CTO Aruna Srivastava and CPO Ruslan Mukhamedvaleev from Koel Labs to talk about how they’re innovating speech technology with the help of AI and classic movies. They also tell Ryan about their time in the Mozilla Builders Accelerator and their experiences as student co-founders in an ever-changing economic and technological landscape.

"Christopher Harrison" / 2025-07-10 3 months ago / 未收藏/ Todd Motto/

发送到 kindle

Ensuring quality code suggestions from Copilot goes beyond the perfect prompt. Context is key to success when working with your AI pair programmer.

The post Beyond prompt crafting: How to be a better partner for your AI pair programmer appeared first on The GitHub Blog.

The post Beyond prompt crafting: How to be a better partner for your AI pair programmer appeared first on The GitHub Blog.

"Kevin Stubbings" / 2025-07-11 3 months ago / 未收藏/ Todd Motto/

发送到 kindle

Discover how to increase the coverage of your CodeQL CORS security by modeling developer headers and frameworks.

The post Modeling CORS frameworks with CodeQL to find security vulnerabilities appeared first on The GitHub Blog.

The post Modeling CORS frameworks with CodeQL to find security vulnerabilities appeared first on The GitHub Blog.

"Shaoni Mukherjee" / 2025-07-12 3 months ago / 未收藏/ DigitalOcean Community Tutorials/

发送到 kindle

Introduction

Generative AI is not a futuristic concept now, but a powerful tool we use in our daily lives. Whether it’s helping a marketer create ad campaigns, a teacher create lesson plans, or a developer build a custom chatbot, generative AI is transforming the way we work, learn, and communicate. Its ability to create text, images, code, and more, with just a simple prompt, is saving people time, boosting creativity, and opening up new possibilities for businesses of all sizes.Let us take an example of a freelance writer who once used to spend hours researching topics and creating every article outline from scratch. Now, with the help of Gen AI tools, they can instantly create multiple article outlines, understand research papers, and instantly create visual representations of complex topics.

Or think about a customer support team at a growing startup—they can now use an AI-powered assistant to answer common queries, freeing up their team to focus on more complex issues. These are just a few examples of how AI is helping people do more with less.

But as exciting as this technology is, it also brings new challenges. Without the right checks in place, AI systems can produce misleading or inappropriate content, unintentionally reflect bias, or even be used in harmful ways. For instance, an AI tool might generate false health advice, or a chatbot could respond rudely if not properly guided. This is where guardrails come in.

Think of Guardrails as the safety features in a car. Just like seatbelts, airbags, and lane assist systems help protect drivers and passengers, guardrails in AI platforms ensure that the technology is used safely, ethically, and responsibly. They prevent misuse, protect user privacy, and make sure the content AI produces is appropriate and aligned with real-world values.

The DigitalOcean GradientAI Platform allows you to use popular foundation models and build AI agents powered by GPUs with ease. You can choose between a fully-managed deployment or making direct requests through serverless inference.

Prerequisites

Before diving further into the article, it is important to have a basic understanding of the following concepts:-

Generative AI Basics: You should be familiar with what generative AI is and how it works. At a minimum, understand that it involves models like GPT, LLaMA, or Stable Diffusion that can generate text, images, or other media based on a given prompt.

-

Foundation Models: Some exposure to foundation models (e.g., GPT-4, LLaMA 3, Mistral) and how they are used as building blocks for AI applications will make it easier to understand how guardrails are applied to them.

-

DigitalOcean Account: To follow along or test out examples, you’ll need an active DigitalOcean account. You should also be familiar with basic account navigation within the DigitalOcean Console.

-

Agent and RAG Pipelines (Optional but Helpful): If you’ve worked with agents or retrieval-augmented generation pipelines before, you’ll have a head start.

Overview of DigitalOcean Guardrails

The DigitalOcean GenAI Platform addresses this need through Guardrails—powerful tools that help developers enforce boundaries, protect sensitive data, and prevent misuse of AI agents.

What Are Guardrails?

Guardrails are configurable safety controls you can attach to AI agents on the DigitalOcean GradientAI Platform. Their core purpose is to detect and respond to sensitive or inappropriate content, either in the input sent to an agent or in its output.When a guardrail is triggered, it overrides the agent’s default behavior and delivers a safer, predefined response. For example, if an input contains a credit card number, the agent will be blocked from responding as usual and instead issue a cautionary message.

Types of Guardrails

DigitalOcean provides three built-in guardrails that serve specific use cases:Sensitive Data Detection

- Detects information like credit card numbers, IP addresses, social security numbers, and other personally identifiable information (PII).

- Fully customizable—developers can choose which categories to detect.

Jailbreak Detection

- Blocks attempts to manipulate or bypass the model’s safety mechanisms.

- Useful for preventing prompt injection attacks and misuse.

Content Moderation

- Flags and intercepts content that is offensive, violent, explicit, or hateful.

- Helps keep applications aligned with content policies.

Attaching Guardrails to Agents

Guardrails can be attached through the DigitalOcean Control Panel using two workflows:Attach Multiple Guardrails to a Single Agent

Log in to your DigitalOcean GradientAI platform, click on the Agents tab to view all your agents, and select the agent to which you want to attach guardrails.

Next, open the Resources tab and scroll down to the Guardrails section and click Add guardrails.

Now, check the boxes next to the guardrails you want to attach, then click Save to confirm your changes.

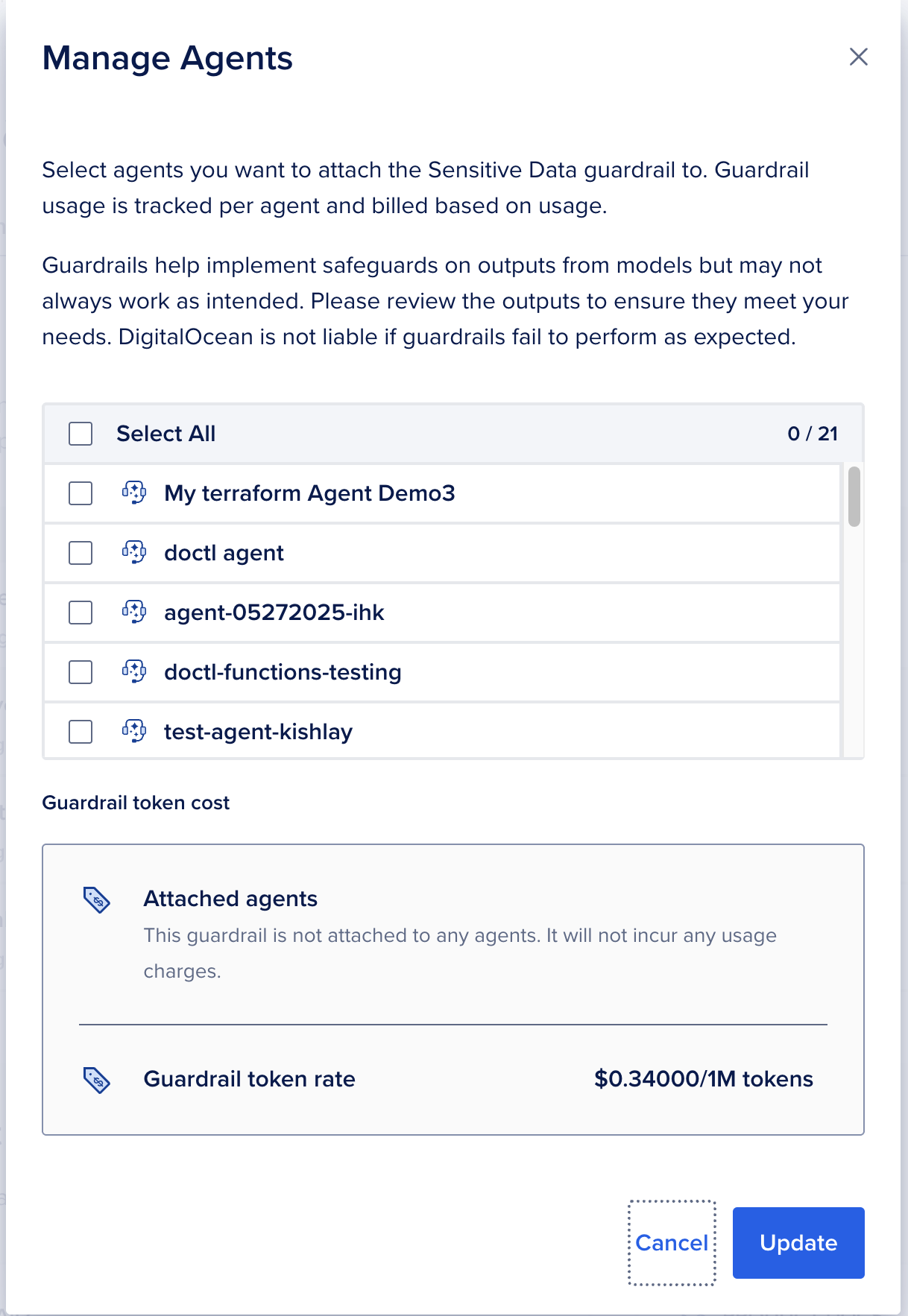

Attach a Guardrail to Multiple Agents**

To attach a guardrail to one or more agents, go to the DigitalOcean Control Panel and go to your GradientAI platform.Next, select the Guardrail tab.

Open the ellipsis(

… ) next to the desired guardrail and select Attach Agent.

The Manage Agents window will open up. Next, select the agents you want to attach and click Update.

Editing Guardrails

You can fine-tune guardrails to align with your use case:To edit your guardrails, navigate to the DigitalOcean Control Panel. From the left-hand menu, select GradientAI Platform, then click on the Guardrails tab to access the guardrails page.

In the Guardrails section, locate the guardrail you’d like to modify. Click the ellipsis (…) next to it and select Edit Guardrail.

You’ll be taken to the guardrail editing page, where you can update various guardrail settings.

On the guardrail editing page, you can modify several settings to tailor the behavior of your guardrail. First, you can update the Name and Description—particularly helpful if you’re editing a duplicated guardrail and want to give it a unique identity.

If the guardrail is of the Sensitive Data Detection type (or a duplicate of one), you can control which specific categories of sensitive information it detects. For example, you might choose to detect credit card numbers, IP addresses, or other data types. By default, all categories are enabled, but you can fine-tune this by clicking Customize categories and selecting only those relevant to your needs. Note that this customization is available only for Sensitive Data Detection guardrails.

You can also modify the Default Agent Response, which is the message the agent displays when the guardrail detects a match in an enabled category. The default response is: “I’m not able to respond to that request, but I can answer other questions. How can I help you with something else?” However, you’re free to replace this with a response more suited to your use case.

Lastly, under Attach Agents, you can link this updated guardrail to specific agents by selecting them from the list. Once all your changes are made, click Update Guardrail to save them.

Duplicating Guardrails

Duplicating a guardrail allows you to reuse and customize existing guardrail configurations without starting from scratch.

On the same guardrail page, you will find the option to duplicate the guardrail. Select that option.

Once you click Duplicate Guardrail, you’ll be taken to the guardrail duplication page where you can customize the following settings:

Name and Description

Enter a unique name and an optional description to identify the duplicated guardrail.

Sensitive Data Detection Categories If the duplicated guardrail is of the Sensitive Data Detection type, you can tailor which data categories it monitors. By default, all categories (e.g., credit card numbers, IP addresses) are enabled.

To customize:

- Click Customize categories

- Check or uncheck the boxes for the specific data types you want to include.

Default Agent Response

This is the message that appears when the agent detects a restricted input. The default message is: “I’m not able to respond to that request, but I can answer other questions. How can I help you with something else?” You can replace this with a custom response that better fits your application’s tone or use case.

Attach Agents If you want the duplicated guardrail to be active for specific agents, check the boxes next to the agents you want to assign it to.

Save the Duplicated Guardrail

After configuring all the required settings, click Duplicate Guardrail to finalize and save your new guardrail.

Destroying Guardrails

If a guardrail is no longer needed, you can easily delete it from the DigitalOcean Control Panel. Follow these steps to safely remove a guardrail:

Log in to the DigitalOcean GradientAI account. Open the same Guardrails tab to open the Guardrails management page.

Next, locate the guardrail you wish to delete and click the ellipsis icon (…) to the right of that guardrail.

In the Guardrails section, find the guardrail you want to delete. A confirmation dialog will appear asking if you’re sure you want to delete the selected guardrail.

Click Confirm or Delete to permanently remove the guardrail. Note: Deleting a guardrail is irreversible. Once deleted, the configuration and settings cannot be recovered.

Best Practices

Implementing guardrails is not just about turning on a few settings and calling it a day. It is also about thoughtful customization, a continuous refinement process, and staying mindful of your users’ experiences. Here are some best practices to help you get the most out of DigitalOcean’s guardrails features:-

Customize and Caution:

Every AI application is different, and so are its users. When adding or tweaking guardrail settings, it’s important to test with real-world prompts. Try prompts that mimic what your end users might actually type. This will help you understand how the model responds and whether it blocks or allows content appropriately. A too-strict guardrail might stop legitimate use cases, while a too-lenient one could let risky content through. Always strike a balance between safety and usability.

-

Use Duplicates for Flexibility:

DigitalOcean’s guardrail system lets you duplicate configurations. For example, you might need stricter content filters for a customer support chatbot, but a more open setup for an internal tool. By duplicating a base configuration, you can customize each version independently, without affecting the original settings.

-

Monitor and Iterate Often:

AI keeps changing; hence, after deploying an agent, always make it a habit to review the agent. This gives you valuable feedback. Are you blocking too much content? Not enough? Use these insights to fine-tune detection categories and thresholds over time. Think of it like tuning a radio: small adjustments can make a big difference in clarity and performance.

Conclusion

By setting up clear boundaries, whether that means filtering out harmful content, limiting model behaviour, or adding extra data privacy, you’re not only protecting your users, you’re also building trust. That trust becomes the foundation for more meaningful, impactful AI applications.

Guardrails don’t limit creativity—they create space for safe innovation.

As you build with GradientAI, remember that ethical AI isn’t just about what your model can do—it’s about what it should do. Guardrails help you stay on the right path.

Further, DigitalOcean gives you the flexibility to shape your AI to meet your goals without compromising responsibility."James Skelton" / 2025-07-12 3 months ago / 未收藏/ DigitalOcean Community Tutorials/

发送到 kindle

Serverless inference is, rightfully, one of the hottest topics in both technical and non-technical circles of AI users, and for good reason. While controlling every aspect of a deployment is often necessary for deploying custom models, serverless takes away the headaches of maintaining and managing a model deployment and API endpoint. This can be incredibly useful for a myriad of different agentic LLM use cases.

In this tutorial, we will show how to get started with Serverless Inference on the GradientAI Platform using the DigitalOcean API. Afterwards, we will discuss some of the potential use cases for the methodology in production and daily workflows.

To get started, we can either use the DigitalOcean API or the Cloud Console. Let’s look at each methodology.

Use the navigation bar on the left hand side of the homepage, and scroll down until you see “API”. Click in to open the API homepage. We can then use the button on the top right to create a new key.

In the key creation page, name your key as needed and give appropriate permissions. To do this, either give full permission or scroll down to “genai” in the custom scope selection and select all. Then, create the key. Save this value for later.

If we want to use the console, simply navigate to the GradientAI platform tab “Serverless Inference” in the Console. Once there, use the button on the bottom right to “Create model access key”.

Then, all we have to do is name our key. Save the output value for later! We will need it for Serverless Inference with Python.

Once your env is set up, open a new .ipynb IPython Notebook file. Use the code snippet below to begin generating text, specifically answering what the capital of France is. Edit the snippet to reflect your changed API key value on line 5.

Now that we have set up our environment to run Serverless Inference, we have a plethora of possible activities we can use the model for. From everything an LLM is capable of, we can create powerful agentic applications that leverage the strength of the AI model. Some possible use cases for this include:

Serverless Inference is a true answer for companies seeking LLM based solutions without the hassle of hiring or learning the required steps to deploying your own server. With DigitalOcean’s GradientAI Platform, accessing Serverless Inference from powerful NVIDIA GPUs just became easier than ever! We encourage everyone to try the new solution!

In this tutorial, we will show how to get started with Serverless Inference on the GradientAI Platform using the DigitalOcean API. Afterwards, we will discuss some of the potential use cases for the methodology in production and daily workflows.

Accessing Serverless Inference on the GradientAI Platform

To get started, we can either use the DigitalOcean API or the Cloud Console. Let’s look at each methodology.Step 1a: Create a DigitalOcean API Key

To get started, you first need to create your own DigitalOcean account and login. Once you’ve done so, navigate to the team space of your choice. From here, we will create a DigitalOcean API key. This will help us create our model access token later on, so we can actually skip this step and go to the section “Step 2b: Create a Model Access key with the Cloud Console”.Use the navigation bar on the left hand side of the homepage, and scroll down until you see “API”. Click in to open the API homepage. We can then use the button on the top right to create a new key.

In the key creation page, name your key as needed and give appropriate permissions. To do this, either give full permission or scroll down to “genai” in the custom scope selection and select all. Then, create the key. Save this value for later.

Step 2A: Create a Model Access key with the API

Next, we are going to make our model access key for GradientAI’s Serverless Inference. To do so, we can either use the console or API. To use the API, use the saved API key from earlier with the following curl request in your terminal. Replace the value for “$DIGITALOCEAN_TOKEN” with your own.curl -X POST -H 'Authorization: Bearer $DIGITALOCEAN_TOKEN' https://api.digitalocean.com/v2/gen-ai/models/api_keys

Step 2B: Create a Model Access key with the Cloud Console

If we want to use the console, simply navigate to the GradientAI platform tab “Serverless Inference” in the Console. Once there, use the button on the bottom right to “Create model access key”.

Then, all we have to do is name our key. Save the output value for later! We will need it for Serverless Inference with Python.

Step 3: Generating Text with Python and Serverless Inference

With our new model access key, we can begin running DigitalOcean Serverless Inference from any machine with internet access! We recommend working from a Jupyter Notebook. Follow the steps outlined in this tutorial for tips on setting up your environment for this article.Once your env is set up, open a new .ipynb IPython Notebook file. Use the code snippet below to begin generating text, specifically answering what the capital of France is. Edit the snippet to reflect your changed API key value on line 5.

from openai import OpenAI

import os

client = OpenAI(

api_key= **“your_model_access_key_here”**,

base_url="https://inference.do-ai.run/v1"

)

stream = client.chat.completions.create(

model="llama3-8b-instruct",

messages=[

{

"role": "developer",

"content": "You are a helpful assistant.",

},

{

"role": "user",

"content": "What is the capital of France?",

},

],

stream=True,

max_completion_tokens=10

)

for chunk in stream:

if chunk.choices[0].delta.content:

print(chunk.choices[0].delta.content, end="", flush=True)

max_completion_tokens to better suit your prompt as needed. We can also edit the model value on line 10 to be any of the available models listed in the DigitalOcean GradientAI Model offerings. This model zoo is constantly being updated to reflect the growing industry, so check back frequently for updates.

Use cases for Serveless AI from DigitalOcean